Twitter has conducted new research into the effectiveness of its warning prompts on potentially offensive tweet replies, which it first rolled out in 2020 and re-launched last year as a means of adding a level of friction, and consideration, to the tweet process.

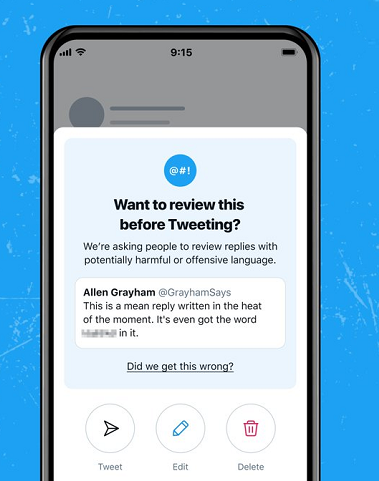

Twitter’s warning prompts use automated detection to pick up any likely offensive terms within tweet replies, which then triggers this alert to add a moment of hesitation in the process.

Back in February, Twitter reported that in 30% of cases where users were shown these prompts, they did in fact end up changing or deleting their replies, in order to avoid possible misinterpretation or offense.

Now, Twitter’s taken a deeper dive into the process to determine the true value of the alerts.

As per Twitter:

“While it was clear that prompts cause people to reconsider their replies, we wanted to know more about what else happens after an individual sees a prompt. To understand this, we conducted a follow-up analysis to look at how prompts influence positive outcomes on Twitter over time. Today, we are publishing a peer-reviewed study of over 200,000 prompts conducted in late 2021. We found that prompts influence positive short and long-term effects on Twitter. We also found that people who are exposed to a prompt are less likely to compose future offensive replies.”

It’s amazing what a simple step added between thought and tweet can do.

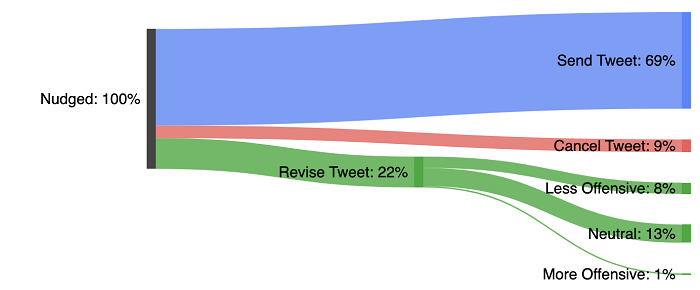

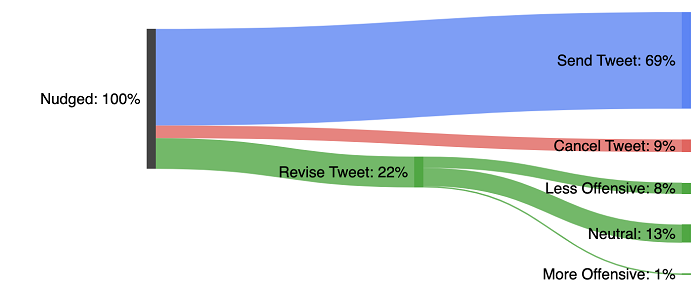

According to Twitter’s research, for every 100 instances where these prompts were displayed, on average:

- 69 tweets were sent without revision

- 9 tweets were not sent

- 22 were revised

Those findings are in line with the 30% figure above, but it’s interesting to note the more granular detail here, and how exactly the prompts have changed user behaviors as a result.
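
As a quick check on that comparison, here's a minimal sketch in Python that tallies the three outcomes. The variable names are illustrative, not Twitter's, and the counts are simply the per-100 figures listed above.

```python
# Outcomes per 100 prompts shown, as listed in the article.
# The dictionary keys are hypothetical labels chosen for this sketch.
outcomes = {"sent_unrevised": 69, "not_sent": 9, "revised": 22}

total = sum(outcomes.values())

# A reply counts as "changed" if it was either withdrawn or revised.
changed = outcomes["not_sent"] + outcomes["revised"]
share_changed = changed / total

print(f"{changed} of {total} prompted replies were changed or withdrawn ({share_changed:.0%})")
```

That puts the combined share of revised and unsent replies at 31 in every 100, which lines up with the roughly 30% that Twitter reported in February.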

But more than this, Twitter also found that the prompts can have ongoing behavioral impacts in the app.

“We also found the effects of being presented with a prompt extended beyond just the moment of posting. We saw that, after just one exposure to a prompt, users were 4% less likely to compose a second offensive reply. Prompted users were also 20% less likely to compose five or more prompt-eligible Tweets”

So, while 4% may not seem overly significant (though at Twitter’s scale, the actual numbers in this context could be big), the ongoing effect is that users end up becoming more considerate in their responses.

Or they just get smarter at using terms that aren’t going to trigger Twitter’s warning.

In addition to this, the researchers also found that prompted users received fewer offensive replies themselves.

“The proportion of replies to prompt-eligible tweets that were offensive decreased by 6% for prompted users. This represents a broader and sustained change in user behavior and implies that receiving prompts may help users be more cognizant of avoiding potentially offensive content as they post future Tweets.”

Again, 6% may seem like a small fraction, but with some 500 million tweets sent every day, the raw number here could be significant.

Of course, this only relates to tweets that trigger a warning, which would be only a small share of overall tweet activity. But it is interesting to consider the impacts of these warning prompts, and how small nudges like this can alter user behavior.

At face value, the results show that Twitter’s offensive reply warnings could serve as an educational tool that encourages more consideration, which, on a broader scale, could help to improve on-platform discourse over time.

But the bigger takeaway is that there are ways to help re-align user behaviors towards more positive engagement, which could be a key step in reducing angst and division, since offense is often unintended, or the result of meaning getting lost in translation via text communications that lack conversational nuance.

That’s an interesting consideration for future platform updates in this respect. And while expanding such prompts into new areas, or making them more sensitive, could be difficult, it does show that misunderstandings are a common element in online debate.

The truth is, in person, many of the people you disagree with online wouldn’t be anywhere near as argumentative or confrontational. If only we could translate more of those in-person characteristics to online chatter. But in terms of immediate response and action, it’s worth taking a moment to consider that the person sending that tweet, in at least some cases, didn’t intend to offend or confront you in this way.

In other words, Twitter isn’t real life. People love controversy, and get caught up in passionate debate. But really, it’s probably just some lonely person trying to find connection.

The less personally you take it, the better it is for your mental health.

You can read Twitter’s full study here.