Visual elements are the main focus of next-level digital experiences, like AR and VR tools, but audio also plays a key role in facilitating fully immersive interaction, with the sounds that you hear helping to transport your mind and bring virtual environments to life.

That’s where Meta’s latest research comes in. In order to facilitate more true-to-life AR and VR experiences, Meta’s developing new spatial audio tools that respond to the different environments displayed within visuals.

As you can see in this video overview, Meta’s work here revolves around the commonalities of sound that people expect to experience in certain environments, and how those expectations can be translated into digital realms.

As explained by Meta:

“Whether it’s mingling at a party in the metaverse or watching a home movie in your living room through augmented reality (AR) glasses, acoustics play a role in how these moments will be experienced […] We envision a future where people can put on AR glasses and relive a holographic memory that looks and sounds the exact way they experienced it from their vantage point, or feel immersed by not just the graphics but also the sounds as they play games in a virtual world.”

That could make Meta’s coming metaverse much more immersive, and sound could actually play a far more significant role in the experience than you might initially expect.

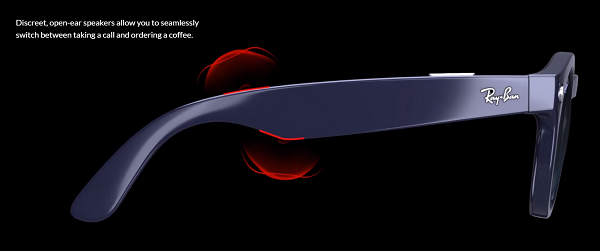

Meta’s already factored this in, to at least some degree, with the first-generation version of its Ray-Ban Stories glasses, which include open-air speakers that deliver sound directly into your ears.

It’s a surprisingly sleek addition. The way the speakers are positioned enables fully immersive audio without the need for earbuds, which seems like it shouldn’t work, but it does, and it may already be a key selling point for the device.

In order to take its immersive audio elements to the next stage, Meta’s opening up three new models for audio-visual understanding to developers.

“These models, which focus on human speech and sounds in video, are designed to push us toward a more immersive reality at a faster rate.”

Meta has already developed its own self-supervised visual-acoustic matching model, as outlined in the video clip, but expanding the research to more developers and audio experts could help it build even more realistic audio translation tools, and further build upon its work.

Which, again, could be more significant than you think.

As noted by Meta CEO Mark Zuckerberg:

“Getting spatial audio right will be one of the things that delivers that ‘wow’ factor in what we’re building for the metaverse. Excited to see how this develops.”

Similar to the audio elements in Ray-Ban Stories, that ‘wow’ factor may well be what gets more people buying VR headsets, which could then help to usher in the next stage of digital connection that Meta is building towards.

As such, it could end up being a major advance, and it’ll be interesting to see how Meta looks to build its spatial audio tools to enhance its VR and AR systems.