Hey, remember how, a few years back, Meta previewed its plans to create brain-reading technology via computer chips implanted in your skull?

That revelation, nestled inconspicuously at the end of its F8 conference in 2017, understandably freaked people out. I mean, Meta already knows all about what you share, who you engage with, and what you’re interested in, which is enough to make very accurate predictions about your psychological make-up. But one day, it could literally be in your brain.

That seems not ideal.

Meta sought to ease those concerns by explaining that its research was primarily focused on medical use cases, like helping people with brain damage and paralysis speak again. But even so, the fact that Meta and its team of data harvesters had even considered this is a concern on some level.

But, all good: Meta actually abandoned its plans for brain-reading tech last year, in favor of alternative control devices, like wrist-based electromyography. Meta also sought to make it clear that it would no longer be looking to invade your brain.

So you can rest easy, right – Mark Zuckerberg is not coming for your brains, like a zombie hungry for data.

Except, he kind of still is.

Today, Meta has outlined a new way of reading people’s minds, and translating thoughts into speech – though this time without the use of brain-implanted chips.

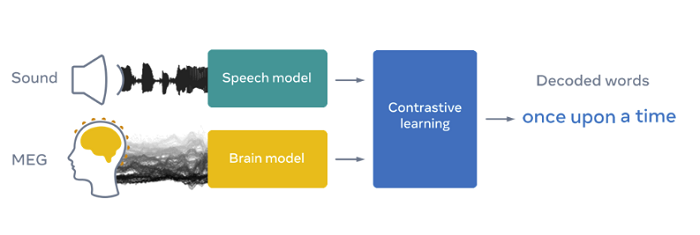

As you can see in this example, the new process utilizes EEG and MEG recordings, sourced via sensors on the head, to ‘read’ brain signals, and translate them into words.

And it’s now producing solid results, as explained by Meta:

“From three seconds of brain activity, our results show that our model can decode the corresponding speech segments with up to 73 percent top-10 accuracy from a vocabulary of 793 words, i.e., a large portion of the words we typically use on a day-to-day basis.”
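For context, ‘top-10 accuracy’ means the correct answer only has to appear somewhere among the model’s ten best guesses, not be its single top pick. Here’s a rough sketch of how that kind of metric is typically calculated. To be clear, this is not Meta’s code: the `top_k_accuracy` function and the toy scores are made up for illustration, and only the 793-word vocabulary and the top-10 cutoff come from the quote above.

```python
VOCAB_SIZE = 793  # vocabulary size from Meta's quote
K = 10            # "top-10" cutoff from Meta's quote


def top_k_accuracy(score_rows, targets, k=K):
    """Share of samples whose true answer is among the k highest-scoring candidates.

    Hypothetical helper for illustration only, not Meta's evaluation code.
    """
    hits = 0
    for scores, target in zip(score_rows, targets):
        # Rank candidate indices from highest score to lowest.
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        if target in ranked[:k]:
            hits += 1
    return hits / len(targets)


# Picking 10 candidates at random from 793 would include the right one
# about 1.3 percent of the time.
chance_level = K / VOCAB_SIZE

# Toy example with 3 candidates and k=2 (invented numbers).
toy_scores = [
    [0.1, 0.9, 0.5],  # true answer is index 2, ranked second: a hit
    [0.8, 0.1, 0.3],  # true answer is index 1, ranked last: a miss
]
toy_targets = [2, 1]
toy_accuracy = top_k_accuracy(toy_scores, toy_targets, k=2)
```

So the 73 percent figure is a long way above the roughly 1.3 percent you’d expect from pure guessing, but it also doesn’t mean the model nails the exact word 73 percent of the time.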

Yep, time to get scared again – Meta is after your innermost thoughts after all.

Jokes aside, there are some significant, and highly valuable, medical use cases for such technology:

“Every year, more than 69 million people around the world suffer traumatic brain injury, which leaves many of them unable to communicate through speech, typing, or gestures. These people’s lives could dramatically improve if researchers developed a technology to decode language directly from noninvasive brain recordings.”

Note the specific mention of ‘noninvasive’ here. Meta clearly thinks that the biggest concern people had was the brain implants, not the fact that this could provide Meta with direct access to your thoughts.

If Meta’s scientists can get this right, it could be a major medical breakthrough, which would be worth the effort either way. But it could also, eventually, be incorporated into a new version of a VR headset, which would be able to read your mind and respond to thought-based cues, facilitating new ways to engage in the theoretical metaverse.

So Meta might eventually be able to read your mind after all, and if the metaverse takes off like Zuck hopes it will, that could become a widely accepted, even valuable use case to improve the sense of presence in these digital environments.

Also, you could get increasingly targeted ads, based on subconscious leanings – which would spark a whole new wave of conspiracy theories and freak many, many people the heck out.

I imagine sales of remote farming properties, disconnected from technology, would skyrocket if that ever came to be.

If you have a ‘living off the grid’ plan in your back pocket, it might be time to revisit it – before Meta gets in there and erases any such thoughts, replacing them with happy sentiment about the metaverse.