Twitter’s looking to increase the value of its Community Notes with a new feature that’ll enable contributors to add a contextual note to an image in the app. Twitter’s system will then attach that note to any matching re-shares of the same image, across all tweets.

From AI-generated images to manipulated videos, it’s common to come across misleading media. Today we’re piloting a feature that puts a superpower into contributors’ hands: Notes on Media

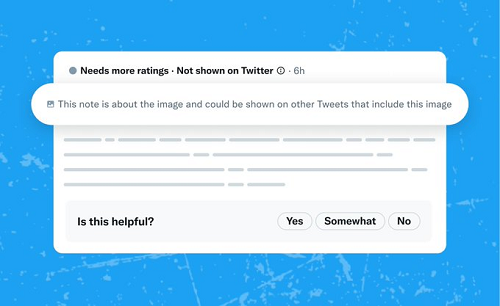

Notes attached to an image will automatically appear on recent & future matching images. pic.twitter.com/89mxYU2Kir

— Community Notes (@CommunityNotes) May 30, 2023

As you can see in this example, when a Community Notes contributor now marks an image as questionable and adds an explanatory note to it, that same note will be attached to other tweets that use the same image, both recent and future ones.

As explained by Twitter:

“If you’re a contributor with a Writing Impact of 10 or above, you’ll see a new option on some Tweets to mark your notes as ‘About the image’. This option can be selected when you believe the media is potentially misleading in itself, regardless of which Tweet it is featured in.”

Community Notes attached to images will include an explainer which clarifies that the note is about the image, not about the tweet content.

The option is currently only available for still images, but Twitter says that it’s hoping to expand it to videos and tweets with multiple images soon.

It’s a good update, which, as Twitter notes, will become increasingly important as AI-generated visuals spark new viral trends across social apps.

Images like the AI-generated picture of the Pope in a puffer jacket, which prompted many to question whether it was real. That’s a more light-hearted example, but it shows how such alerts could help clarify the actual origin of a picture within the tweet itself.

More recently, we’ve also seen examples of how AI-generated images can cause harm, with a digitally created picture of an explosion outside the Pentagon sparking a brief panic online, before further clarification confirmed that it wasn’t actually a real event.

That specific incident likely prompted Twitter to take action on this front, and using Community Notes for this purpose could be an effective way to add context to AI-generated and AI-enhanced photos at scale.

Still, for all its benefits, Community Notes remains a flawed system for addressing online misinformation. The key issue is that notes can only be applied after these visuals have been shared, and Twitter users have already been exposed to them. And given the real-time nature of tweets, the delay involved in writing a Community Note, having it approved, and then seeing it appear on the tweet could mean that tweets like the Pentagon example continue to gain wide exposure in the app before a note can be appended.

It would likely be faster for Twitter itself to moderate extreme cases and remove that content outright. But that goes against Elon Musk’s more free-speech-aligned approach, in which Twitter’s users decide what is and is not correct, with Community Notes as the key lever in this respect.

That ensures content decisions are dictated by the Twitter community rather than Twitter management, while also reducing Twitter’s moderation costs, which makes it a win-win for the company. The process makes sense, but in practice, it could let various trends gain traction before Community Notes can take effect.

Either way, this is a good addition to the Community Notes process, and one that will become more important as AI-generated content continues to take hold and spark new viral trends.