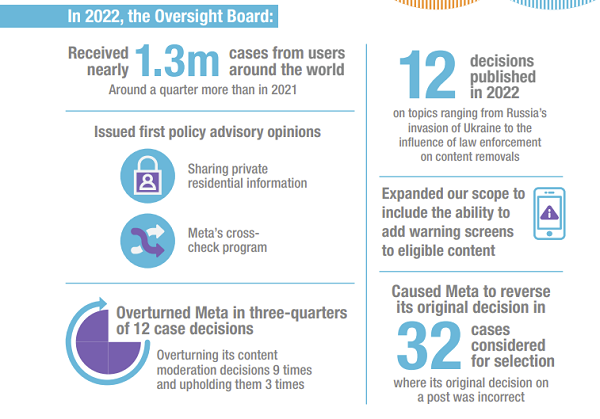

Meta’s Oversight Board has published its 2022 annual report, which provides an overview of the cases it has reviewed and the improvements to Meta’s systems that it has been able to facilitate as a result. The report also provides more transparency into the actions Meta takes to enforce its content rules.

The Oversight Board is essentially an experiment in social platform regulation, and in how platforms can draw on outside experts to refine their rules.

And on this front, you’d have to say it’s been a success.

As per the Oversight Board:

“From January 2021 through early April 2023, the Board made a total of 191 recommendations to Meta. For around two-thirds of these, Meta has either fully or partially implemented the recommendation, or reported progress towards its implementation. In 2022, it was encouraging to see that, for the first time, Meta made systemic changes to its rules and how they are enforced, including on user notifications and its rules on dangerous organizations.”

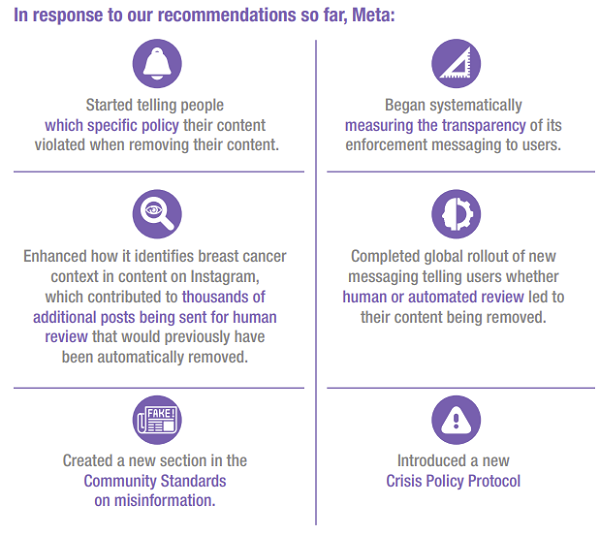

This has been a key focus for the Oversight Board, in facilitating more transparency from Meta in its content decisions, thereby giving users more understanding as to why their content was restricted or removed.

“In the past, we have seen users left guessing about why Meta removed their content. In response to our recommendations, Meta has introduced new messaging globally telling people the specific policy they violated for its Hate Speech, Dangerous Individuals and Organizations, and Bullying and Harassment policies. In response to a further recommendation, Meta also completed a global rollout of messaging telling people whether human or automated review led to their content being removed.”

This transparency, the Board says, is key in providing baseline understanding to users, which helps to alleviate angst, while also combating conspiracy theories around how Meta makes such decisions.

That’s true in almost any setting. In the absence of clarity, people will come up with their own explanations, and for some, that eventually leads to more far-fetched theories around censorship, authoritarian control, or worse. The best way to avoid this is to provide more clarity, something that Meta understandably struggles with at such a huge scale, but simple explainer elements like these could go a long way towards building a better understanding of its processes.

Worth noting, too, that Twitter is also now looking to provide more insight into its content actions, to address the same concern.

The Oversight Board also says that its recommendations have helped to improve protections for journalists and protesters, while also establishing better pathways for human review of content that would previously have been banned automatically.

It’s interesting to note the various approaches here, and what they may mean in a broader social media context.

As noted, the Oversight Board experiment is essentially a working model for how broad-scale social media regulation could work, by inviting outside experts to review content decisions, thus taking those calls out of the hands of social platform execs.

Ideally, the platforms themselves would prefer to allow more speech, to facilitate more usage and engagement. But in cases where a line needs to be drawn, right now, each app is making its own calls on what is and is not acceptable.

The Oversight Board is an example of how this could be done via a third-party group, and why it should be, though thus far, no other platform has adopted a similar approach or sought to build on Meta’s model.

Based on the findings and improvements listed, there does seem to be merit in this approach, ensuring more accountability and transparency in the calls being made by social platforms on what can and cannot be shared.

Ideally, a similar global group could be established to provide oversight across all social apps, but regional variances and restrictions likely make that an impossible goal.

But maybe, a US-based version could be established, with the Oversight Board model showing that this could be a viable, valuable way forward in the space.

You can read the Oversight Board’s 2022 annual report here.