Google has announced two new AI-powered ad campaign options, and it has also launched a new AI-powered AR Try-On process that will provide more accurate visualizations of how clothing will look on you, based on your body type.

First off, on the new ad types – Google has launched Demand Gen and Video View campaign options, which will provide additional ways to use AI in your campaign creation and targeting process.

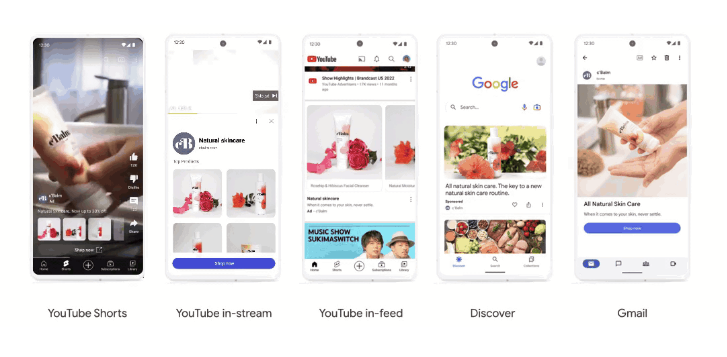

Demand Gen campaigns help brands get more out of their video and image assets by adapting them to different Google ad formats, in order to expand reach and boost appeal among specific users.

As explained by Google:

“With Demand Gen, your best-performing video and image assets are integrated across our most visual, entertainment-focused touchpoints – YouTube, YouTube Shorts, Discover and Gmail. These products reach over 3 billion monthly users as they stream, scroll and connect.”

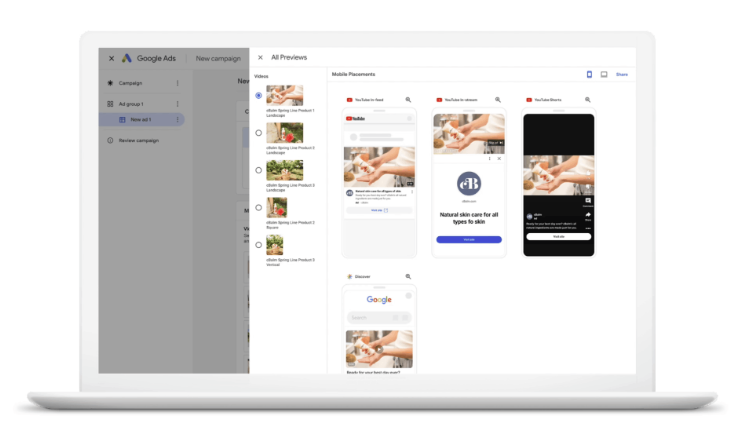

Demand Gen enables you to manage your campaigns across each of these surfaces, with your creative elements reused in each format.

You can then target these ads using Google’s AI, or with lookalike segments based on your own audience lists.

The process should help you get the most out of your creative assets, while also refining your targeting to drive the best response.

Google’s also launching new Video View campaigns, which will enable brands to maximize views across in-stream, in-feed, and YouTube Shorts, all within a single campaign.

According to Google: “In early testing, Video View campaigns have achieved an average of 40% more views compared to in-stream skippable cost-per-view campaigns.”

Google’s evolving AI tools essentially deliver better targeting and reach with less manual effort, so it could be worth experimenting with these new options to see what kind of results you get.

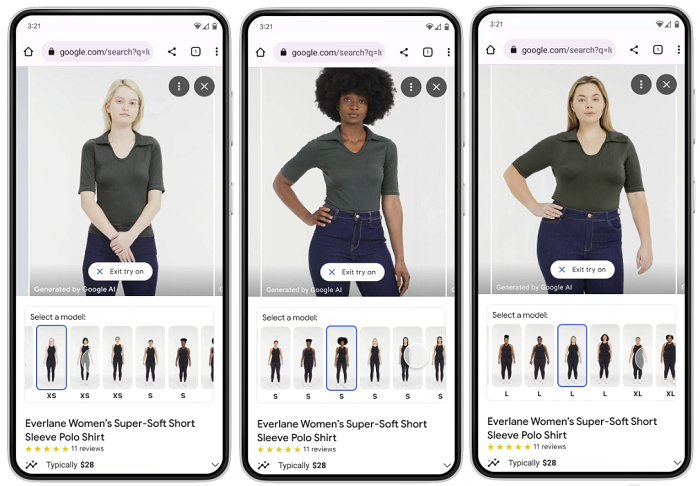

On another front, Google is also looking to improve the online shopping experience with a new generative AI process for trying on clothing, which uses real models whose body types can better represent your own.

As per Google:

“Virtual try-on for apparel shows you how clothes look on a variety of real models. Here’s how it works: Our new generative AI model can take just one clothing image and accurately reflect how it would drape, fold, cling, stretch and form wrinkles and shadows on a diverse set of real models in various poses. We selected people ranging in sizes XXS-4XL representing different skin tones (using the Monk Skin Tone Scale as a guide), body shapes, ethnicities and hair types.”

That should give you a better sense of how clothing will look on you specifically, which could be a big step toward expanded AR Try-On options and a better online shopping experience.

“Starting today, US shoppers can virtually try on women’s tops from brands across Google, including Anthropologie, Everlane, H&M and LOFT. Just tap products with the ‘Try On’ badge on Search and select the model that resonates most with you.”

Google says that this will be expanded to more products and models over time.

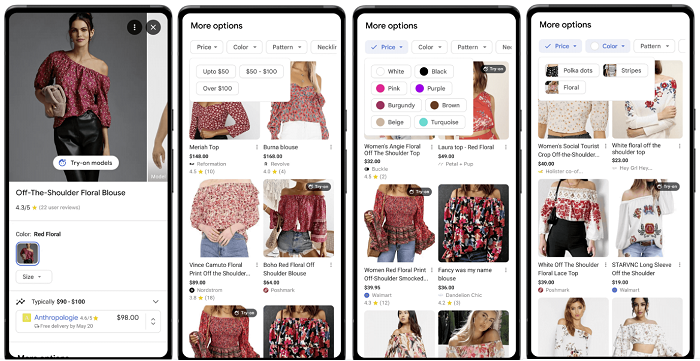

Finally, Google has also added new ‘guided refinements’ for product searches, which let you add extra qualifiers to your searches in the app.

As you can see, these new tools let you narrow down your results based on price, color, pattern, and more.

The matches are powered by machine learning and new visual matching algorithms, another example of how Google is integrating advanced AI into its Search tools – not as a replacement for traditional search, but as a complement that adds utility.

That’s an important distinction for Google to highlight, because right now, much of the industry chatter centers on whether generative AI will eventually render Google Search obsolete by enabling more semantic search and providing more human-like answers via conversational AI. It’s too early to predict the full impact in this respect, but there is a case to be made that simplified AI interactions will supplant at least some Google search traffic, which could be a major problem for Google if it fails to keep up with the latest trends.

That’s why Google’s developing its own AI models, and rolling out its own generative AI features.

Updates like these are another step in that direction, as the company adapts to shifts in user behavior.