X Corp’s looking to fight back against claims that instances of hate speech have increased across the platform since Elon Musk purchased the app, by suing the Center for Countering Digital Hate (CCDH) over various reports published by the group that track the rise of harmful content.

The CCDH has published several reports in which it claims to have tracked the rise of hate speech in the app since Musk’s purchase of the platform. Back in December, the group reported that slurs against Black and transgender people had significantly increased after Musk took over at the app. Its research also suggests that Twitter is not taking action against rule-breaking tweets posted by Twitter Blue subscribers, and that it’s allowing tweets that reference the LGBTQ+ community alongside ‘grooming’ slurs to remain active.

Some of this seemingly aligns with the app’s new ‘Freedom of Speech, Not Reach’ approach, which sees the X team now leaning more towards leaving tweets up, as opposed to removing them. But even so, X is now looking to counter the CCDH’s claims in court, as Musk seeks to uncover who’s behind the group.

As per the CCDH:

“Last week we got a letter from Elon Musk’s X. Corp threatening CCDH with legal action over our work, exposing the proliferation of hate and lies on Twitter since he became the owner. Elon Musk’s actions represent a brazen attempt to silence honest criticism and independent research in the desperate hope that he can stem the tide of negative stories and rebuild his relationship with advertisers.”

That does indeed seem to be the key motivation here: countering highly publicized claims that the platform is now less brand-safe than it has been in the past, in the hope of reassuring ad partners.

But there is also some validity to X’s objections, with Meta having previously criticized the CCDH’s findings as limited in scope, and therefore not indicative of its overall performance in mitigating hate speech.

That’s an inevitable limitation of any third-party assessment. Outside groups can only access a limited number of posts and examples, so any such review can only reflect the content that they choose to include in their study pool. Both Meta and X have claimed that the CCDH reports are too limited to be indicative, and that any conclusions drawn from them therefore shouldn’t be considered valid measures of their broader performance.

But that hasn’t stopped such reports from gaining widespread media coverage, which has likely had an impact on X’s business. The more reports of hate speech and harmful content there are, the more hesitant brand partners will be to advertise in the app, and that’s what Musk and his legal team are now looking to fight back against by taking the group to court.

In response, the CCDH has vowed to stand by its claims, labeling the letter from Musk’s lawyers ‘a disturbing effort to intimidate those who have the courage to advocate against incitement, hate speech and harmful content online’. The CCDH has also countered that Musk has deliberately sought to restrict outside research by changing the rules around third-party data access. As such, there’s no way to conduct the kind of full-scale analysis of the platform’s content that the letter appears to expect.

Musk and his X team have increased the cost of API access at the platform, including for academic groups, which does indeed restrict such analysis, pretty much stamping it out completely in most cases. As a result, the only true source of insight in this respect is the data that X produces itself.

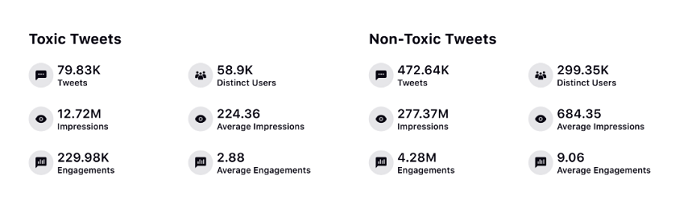

On that front, Musk and the X team have repeatedly claimed that hate speech impressions are way down since Musk took over at the app, with its most recent claim on this front being:

We remain committed to maintaining free speech on Twitter, while equally maintaining the health of our platform. Today, more than 99.99% of Tweet impressions are from healthy content, or content that does not violate our rules.

Read more about our progress on our enforcement…

— Safety (@Safety) July 12, 2023

As we’ve reported previously, that’s an unbelievably high number, but Musk and his team are seeking to combat any counterclaims, despite producing no data to support such statements, in the hope of easing advertiser concerns.

And X could, in fact, produce that data. Musk and his team could publish a detailed report on hate speech that clearly outlines their actual enforcement actions, and explains how this 99.99% figure was established. That would be the most definitive counter to the CCDH claims, but it also seems unlikely to happen.

Because there’s no way that 99.99% of tweet impressions are from ‘healthy content’.

Part of the answer here lies in how X interprets such comments and mentions, with X and its assessment partners changing the definitions around what qualifies as hate speech.

For example, X’s assessment partner Sprinklr has previously outlined how its systems now take a more nuanced approach to assessing hate speech, by analyzing the context within which identified hate terms are used, as opposed to just tallying mentions.

As per Sprinklr:

“Sprinklr’s toxicity model analyzes data and categorizes content as ‘toxic’ if it’s used to demean an individual, attack a protected category or dehumanize marginalized groups. Integrating factors such as reclaimed language and context allowed our model to eliminate false positives and negatives as well.”

In other words, hate speech terms are often not used in a hateful way, and Sprinklr’s assessment process is now more attuned to this.

Based on this, back in March, Sprinklr found that 86% of X posts that included hate speech terms were not actually considered harmful or intended to cause harm.

Which, again, is an incredibly high number. Based on a list of 300 English-language slur terms, this assessment suggests that 86% of the time, these terms are not used in a negative or harmful way.

That seems like it can’t be right, but again, the actual data hasn’t been provided, so there’s no way to verify or refute such claims.

That seems to be X’s approach: to combat outside assessments without providing counter-evidence of its own, beyond basic overviews, in the hope that people will simply take the company at its word.

It’s also worth noting that the CCDH isn’t the only group to have reported an increase in hate speech in the app since Musk took over, yet Musk looks to be taking aim at the CCDH specifically, based on who’s funding the group and suspicion about its aims.

But again, X could counter this by producing its own data, which it claims to have. The above figures came from somewhere, so why not publish the full report that led to this overview and show, in detail, the basis for its counterclaims?

That would seem a far simpler way to refute such claims than heading to court, which lends credibility to the CCDH’s argument that this is an attempt to intimidate rather than clarify.