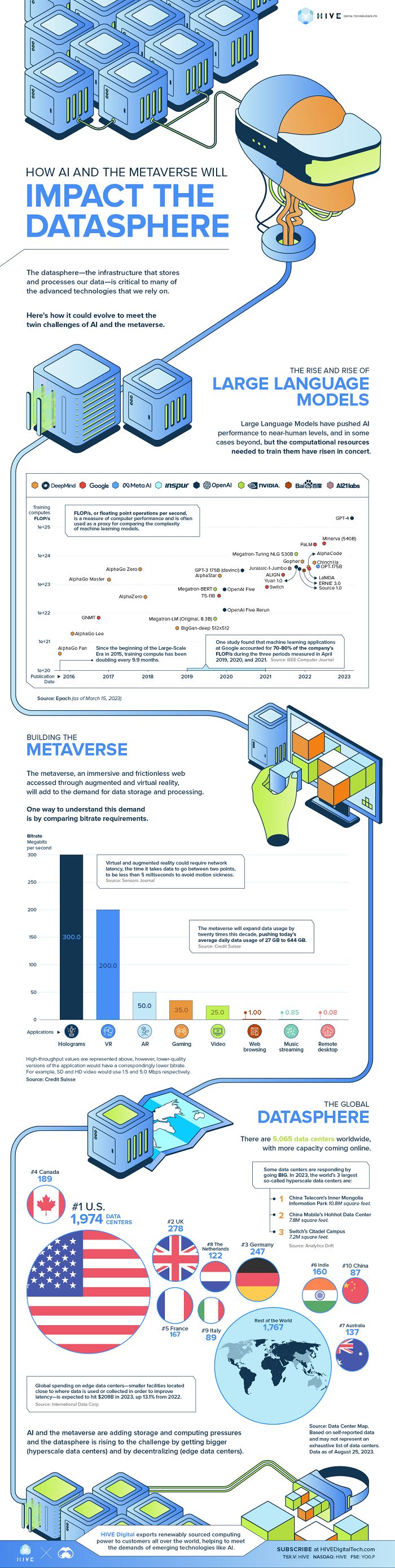

As AI and large language models (LLMs) become more commonplace, it's worth considering the amount of computational power and data storage that these systems require to operate.

Demand for high-grade GPUs, for example, is still exceeding supply, as more tech companies and investors look to muscle in, while the big players continue to build out their data center capacity in order to squeeze smaller players out of the market.

That, inevitably, means that control over many of these new processes will eventually fall to those with the most money. Even if you have concerns about next-level computational power being governed by CEOs and corporations, there's not a heap that you can do about it, because you need an established holding just to get in.

Well, unless a government steps in and builds its own infrastructure to facilitate AI development, though that seems unlikely.

And it's not just AI. Crypto processing, complex analysis, and advanced scientific discovery are now also largely reliant on a few key providers that have available capacity.

It’s a concern, but essentially, you can expect to see a lot more investment in big data centers and processing facilities over the coming years.

This new overview from Visual Capitalist (for Hive Digital) provides some additional context. Here, the Visual Capitalist team has broken down the current data center landscape, and what we're going to need to facilitate next-level AI, VR, the metaverse, and more.

It's an eye-opening summary. You can check out Visual Capitalist's full overview here.