Instagram is testing another way to protect users from exposure to unwanted content in the app, this time in DMs, with a new nudity filter that would block likely nudes in your IG Direct messages.

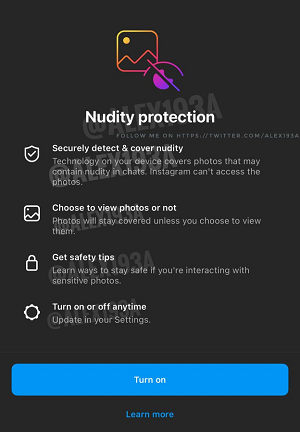

As outlined in this feature overview, uncovered by app researcher Alessandro Paluzzi, the new ‘nudity protection’ option would enable Instagram to activate the nudity detection feature in iOS, released late last year, which scans incoming and outgoing messages on your device to detect potential nudes in attached images.

Where nudity is detected, the system can then blur the image, as Instagram notes. Importantly, this means that Instagram and parent company Meta would not be downloading and analyzing your messages for this feature. It would all be done on your device.

Of course, it still seems slightly concerning that your OS is checking your messages and filtering them based on their content. But Apple has worked to reassure users that it’s not downloading the actual images either, and that the process relies on machine learning and data matching, which doesn’t trace or track the specifics of your personal interactions.

Still, you’d imagine that Apple is keeping tabs somewhere on how many images it detects and blurs through this process, which could mean that it has stats on how many nudes you’re likely being sent. Not that this would mean anything, but it could feel a little intrusive if Apple were ever to report on it.

Either way, the potential safety value may outweigh such concerns (which are unlikely to ever surface), and this could be another important measure for Instagram, which has been working to implement more protections for younger users.

Last October, as part of the Wall Street Journal’s Facebook Files exposé, leaked internal documents were published showing that Meta’s own research points to potential concerns about Instagram and the harmful mental health impacts it can have on teen users.

In response, Meta has rolled out a range of new safety tools and features, including ‘Take a Break’ reminders and updated in-app ‘nudges’, which aim to redirect users away from potentially harmful topics. It’s also expanded its sensitive content defaults for young users, with all account holders under the age of 16 now being put into its most restrictive exposure category.

Combined, these efforts could go a long way toward protecting teen users. This additional nudity filter would build on them, further underlining Instagram’s commitment to safety in this respect.

Because whether you like it or not, and whether you understand it or not, nudes are part of modern digital interaction. But they’re not always welcome, and this could be a simple, effective way to limit exposure to them.