Among the key concerns around Elon Musk’s takeover at Twitter has been the perceived relaxation of past regulations around hate speech, misinformation, and other concerning elements.

Musk, who strongly supports allowing all kinds of speech, whether it’s personally objectionable or not, has overseen the reinstatement of tens of thousands of accounts that were previously banned by Twitter management. He’s also removed restrictions designed to curb COVID misinformation and canceled warning labels on Chinese and Russian state media content, while himself promoting various conspiracy theories to his 134 million followers.

It’s changes like these that have reportedly seen many Twitter advertisers shun the app, due to concerns around potential association with hate speech and offensive material. But is hate speech actually on the rise on Twitter 2.0, or is it, as Musk and his team claim, actually declining due to updated processes for detecting and limiting such content in the app?

This was the key point of contention to come out of Musk’s interview with the BBC this week, which Musk broadcast live via Twitter Spaces. Overall, the almost two-hour-long interview didn’t provide any new insight. Musk discussed his rushed layoffs at the app and the need to cut costs to save the company, claimed that his dog is now the CEO of Twitter, and said that Twitter could possibly break even within months.

But hate speech, and the impact that it’s had on advertisers, was a clear sore point, with Musk sharing this exchange to highlight what he perceives as media bias around this element.

Of course, one user’s personal experience is not indicative of the scope of the potential problem, if there is one – though as noted, Musk and his team claim that hate speech is actually way down since he took over at the app.

Could that be true? Again, following the reinstatement of so many previously banned accounts, many of which were shut down for violating the platform’s hate speech rules, it seems unlikely that hate speech has declined. So how are Musk and Co. coming up with these stats, and what are the studies being referred to by the BBC in relation to the rise in hateful content?

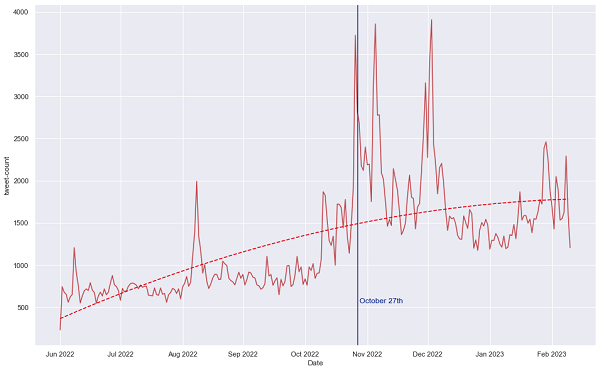

First off, let’s look at the external research, which reportedly shows that hate speech has increased. As referred to in the BBC interview, the Institute for Strategic Dialogue (ISD) released a study last month which it says shows that the volume of antisemitic tweets more than doubled in the three-month period after Musk’s takeover at the app.

That’s quite a different chart to the one that Twitter has shared. So what explains the variance here, and why is ISD’s data showing a sustained rise where Twitter’s own numbers reflect a fall?

In some ways, you could say that the biggest spike in this chart reflects the same incident that Twitter’s data points to, which it claims is an increase in bot attacks designed to discredit Musk’s leadership by amplifying slurs in the app.

Indeed, according to ISD’s report:

“We also identified a surge in the creation of new accounts posting hate speech which correlated with Musk’s takeover. In total 3,855 accounts which posted at least one antisemitic Tweet were created between October 27 and November 6. This represents more than triple the rate of potentially hateful account creation for the equivalent period prior to the takeover.”

That likely aligns with Twitter’s detection of bots, while ISD does also note that Twitter is now removing more content:

“The proportion of antisemitic content removed by Twitter appears to have increased in the period since the takeover, with 12% of antisemitic tweets subsequently unavailable for collection, compared to 6% before the takeover. However this potential increase in removal rate has not kept pace with the increase in overall antisemitic content, with the result that hate speech remains more accessible on the platform than before Musk’s acquisition.”
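To see why a doubled removal rate doesn't offset a doubled volume, here's a rough back-of-the-envelope sketch. The 6% and 12% removal rates come from ISD's report, but the baseline of 1,000 tweets is purely hypothetical, and a flat 2x is used as a lower bound for "more than doubled". The function name is also just illustrative.

```python
def accessible_after_removal(total_tweets: int, removal_rate: float) -> int:
    """Return how many tweets remain visible after a share is removed."""
    return round(total_tweets * (1 - removal_rate))


# Hypothetical baseline volume, chosen only to make the arithmetic easy to read.
baseline_volume = 1000
# ISD says volume "more than doubled", so 2x is a conservative lower bound.
post_takeover_volume = 2 * baseline_volume

# Removal rates as reported by ISD: 6% before the takeover, 12% after.
before = accessible_after_removal(baseline_volume, 0.06)
after = accessible_after_removal(post_takeover_volume, 0.12)

print(f"Accessible before: {before}")
print(f"Accessible after:  {after}")
print(f"Ratio: {after / before:.2f}x")
```

On these assumed numbers, 940 tweets remain accessible before the takeover versus 1,760 after, so the higher removal rate still leaves nearly twice as much content in view.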

ISD’s findings also correlate with similar data from The Center for Countering Digital Hate, which found that slurs against Black and transgender people significantly jumped shortly after Musk took over at the app, while engagement on hate speech also rose.

“The average number of likes, replies, and retweets on posts with slurs was 13.3 in the weeks leading up to Musk’s Twitter 2.0. Since the takeover, average engagements on hateful content has jumped to 49.5, according to the report.”

But again, these findings relate to the early stages of the shift, when Twitter itself admits there was a spike. The question then is whether things have changed since, and how they could have changed if Twitter is working to reduce restrictions on speech.

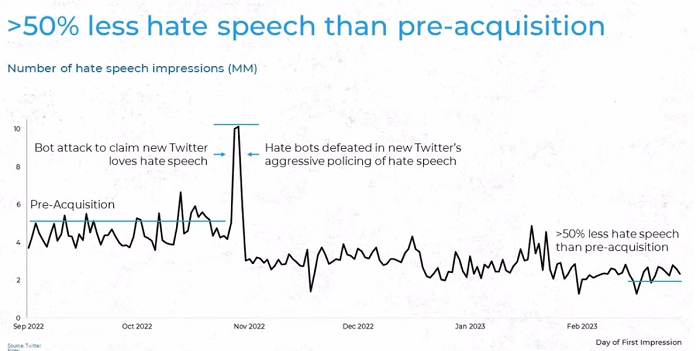

Data released by Twitter provides some additional context. Last month, Twitter published new insights from Sprinklr which shed more light on its efforts to curb hate speech, and how it’s calculating its figures.

As per Twitter:

“Sprinklr defines hate speech by evaluating slurs in the nuanced context of their use. Twitter has, to this point, taken a broader view of the potential toxicity of slur usage. To quantify hate speech, Twitter & Sprinklr start with 300 of the most common English-language slurs. We count not only how often they’re tweeted but how often they’re seen (impressions). Our models score slur Tweets on ‘toxicity’, the likelihood that they constitute hate speech.”

According to this methodology, most slur usage via tweet is actually not hate speech, with certain terms being used within certain communities in a way that requires more nuance in assessment than simple counting data. Terms used in the Black community for example, may not be viewed as hate speech on balance, but would be considered such if you were using keyword tracking.

Twitter claims that its tracking process factors in this consideration, where others do not, and that when any such terminology is used in a hateful way, Twitter takes action to either remove the tweet or restrict its reach.

“Sprinklr’s analysis found that hate speech receives 67% fewer impressions per Tweet than non-toxic slur Tweets. No model is ever perfect, and this work is never done. We’ll continue to combat hate speech by incorporating other languages, new terms, and more precise methodologies – all while increasing transparency.”

Essentially, Twitter says that counting all mentions of potential slurs, as per these external studies, is not an effective means of measuring their impact, because what matters is not the mentions themselves, but the context in which they’re used and, subsequently, the reach they get.

Without these considerations being factored in, an assessment can’t be accurate, which would explain why Twitter’s data is so different from the findings of third-party analysis.
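As a loose illustration of how the two approaches can diverge on the same data, here's a minimal sketch. To be clear, this is not Sprinklr's or Twitter's actual model: the `Tweet` fields, the toxicity scores, the 0.8 threshold, and the sample data are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Tweet:
    contains_tracked_term: bool  # matched one of the tracked slur keywords
    toxicity: float              # hypothetical model score between 0 and 1
    impressions: int             # how many times the tweet was seen


# Assumed cut-off for treating a tweet as hate speech; not a published value.
TOXICITY_THRESHOLD = 0.8


def keyword_count(tweets: list[Tweet]) -> int:
    """Simple keyword tracking: every tweet containing a tracked term counts."""
    return sum(1 for t in tweets if t.contains_tracked_term)


def hateful_tweets(tweets: list[Tweet]) -> list[Tweet]:
    """Context-aware view: only tracked-term tweets scored as toxic count."""
    return [
        t for t in tweets
        if t.contains_tracked_term and t.toxicity >= TOXICITY_THRESHOLD
    ]


def hateful_impressions(tweets: list[Tweet]) -> int:
    """Reach of hate speech: total impressions on tweets scored as toxic."""
    return sum(t.impressions for t in hateful_tweets(tweets))


# Made-up sample: four tweets contain a tracked term, two are scored as toxic.
sample = [
    Tweet(True, 0.10, 500),    # e.g. in-community usage, low toxicity
    Tweet(True, 0.95, 40),     # hateful usage with restricted reach
    Tweet(True, 0.20, 300),
    Tweet(True, 0.90, 60),
    Tweet(False, 0.00, 1000),  # no tracked term at all
]

print("Keyword matches:", keyword_count(sample))
print("Scored as hate speech:", len(hateful_tweets(sample)))
print("Hate speech impressions:", hateful_impressions(sample))
```

In this toy data, keyword tracking flags four tweets, while the context-based view counts two, with 100 impressions between them. Which figure better reflects reality depends entirely on how reliable the toxicity scoring is, and that's the part outside researchers can't check.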

Is that correct? Well, without the full comparative data before you, it’s hard to say, but the expanded assessment process does make sense, which could mean that more binary analysis of such terms is flawed, at least to some degree.

Still, Twitter is also facing billions in fines in Germany for failing to remove hate speech in a timely manner, as per local regulations, and it’ll be interesting to see exactly what specific examples German authorities provide in that case.

So there are still, seemingly, some concerns – but the expanded context that Twitter refers to, along with its increased efforts to restrict hate speech, does make some sense.

We’ll no doubt get more data on this as time goes on, but the overall data does present a more nuanced picture than some findings may suggest.