LinkedIn has rolled out a new detection system for policy-violating content in posts, which uses AI to prioritize its moderators’ review queue. According to LinkedIn, the system has already led to significant reductions in users’ exposure to violating content.

The new system routes all potentially violative content through a set of AI models, which score each item so that it can be ranked by priority in the review queue.

As explained by LinkedIn:

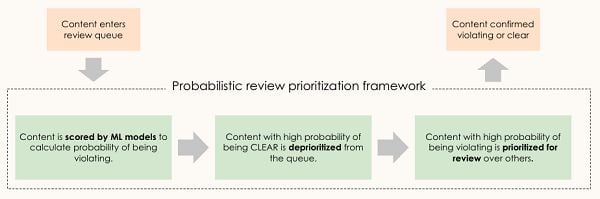

“With this framework, content entering review queues is scored by a set of AI models to calculate the probability that it likely violates our policies. Content with a high probability of being non-violative is deprioritized, saving human reviewer bandwidth, and content with a higher probability of being policy-violating is prioritized over others so it can be detected and removed quicker.”
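To illustrate the prioritization LinkedIn describes, here’s a minimal Python sketch of a review queue ordered by a model’s violation probability. The `ReviewQueue` class and its `push`/`pop` methods are hypothetical names, not LinkedIn’s code, and the probability scores are assumed to come from whatever models do the scoring upstream.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class _Entry:
    # heapq is a min-heap, so store the negated score to pop the highest first
    neg_score: float
    seq: int  # insertion counter: equal scores are reviewed first-in, first-out
    item_id: str = field(compare=False)


class ReviewQueue:
    """Hypothetical review queue ordered by a model's violation probability."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()

    def push(self, item_id: str, p_violation: float) -> None:
        if not 0.0 <= p_violation <= 1.0:
            raise ValueError("p_violation must be a probability in [0, 1]")
        heapq.heappush(self._heap, _Entry(-p_violation, next(self._counter), item_id))

    def pop(self) -> tuple:
        # Returns (item_id, p_violation) for the most likely violating item
        entry = heapq.heappop(self._heap)
        return entry.item_id, -entry.neg_score

    def __len__(self) -> int:
        return len(self._heap)


queue = ReviewQueue()
queue.push("post-a", 0.05)  # very likely fine: sinks to the back of the queue
queue.push("post-b", 0.97)  # very likely violating: reviewed first
queue.push("post-c", 0.40)
while queue:
    print(queue.pop())  # ('post-b', 0.97), then ('post-c', 0.4), then ('post-a', 0.05)
```

A heap keeps each insertion and removal at logarithmic cost, which matters when new content keeps arriving while reviewers work through the queue.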

This is probably how you’d assume such systems already worked, using some level of automation to determine severity. But according to LinkedIn, this newer, more advanced AI process is better at sorting incidents and ensures that the worst cases are addressed faster, by refining its human moderators’ workload.

That means a lot depends on the accuracy of its automated detection systems, and their ability to determine whether posts are harmful or not.

On this front, LinkedIn says it’s using new models that are continually updated based on the latest examples.

“These models are trained on a representative sample of past human labeled data from the content review queue, and tested on another out-of-time sample. We leverage random grid search for hyperparameter selection and the final model is chosen based on the highest recall at extremely high precision. We use this success metric because LinkedIn has a very high bar for trust enforcements quality so it is important to maintain very high precision.”
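The selection rule LinkedIn describes, picking the model with the highest recall at a fixed, very high precision, can be sketched in Python as below. The function names, the `min_precision` parameter and the toy data are assumptions for illustration; LinkedIn hasn’t published this code. Here “random grid search” is read as sampling a random subset of configurations from a hyperparameter grid, and `evaluate` is a hypothetical callback that trains a model with a given configuration and returns its labels and scores on the held-out, out-of-time sample.

```python
from __future__ import annotations

import itertools
import random
from typing import Callable, Optional, Sequence, Tuple


def recall_at_precision(y_true: Sequence[int], scores: Sequence[float],
                        min_precision: float) -> Tuple[float, Optional[float]]:
    """Best recall over score thresholds whose precision is >= min_precision.

    An item counts as flagged when its score >= threshold. Returns
    (0.0, None) if no threshold reaches the precision floor.
    """
    total_pos = sum(y_true)
    if total_pos == 0:
        return 0.0, None
    # Walk items from highest to lowest score; each distinct score is a candidate threshold
    pairs = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    best_recall, best_threshold = 0.0, None
    tp = fp = 0
    for i, (score, label) in enumerate(pairs):
        if label:
            tp += 1
        else:
            fp += 1
        # Evaluate only after every item tied at this score has been counted
        if i + 1 < len(pairs) and pairs[i + 1][0] == score:
            continue
        precision = tp / (tp + fp)
        recall = tp / total_pos
        if precision >= min_precision and recall > best_recall:
            best_recall, best_threshold = recall, score
    return best_recall, best_threshold


def random_grid_search(grid: dict, evaluate: Callable, n_trials: int,
                       min_precision: float, seed: int = 0):
    """Sample configs from the grid; keep the best recall at the precision floor."""
    configs = [dict(zip(grid, values)) for values in itertools.product(*grid.values())]
    rng = random.Random(seed)
    best = None  # (recall, threshold, config)
    for config in rng.sample(configs, min(n_trials, len(configs))):
        y_true, scores = evaluate(config)  # held-out, out-of-time sample
        recall, threshold = recall_at_precision(y_true, scores, min_precision)
        if best is None or recall > best[0]:
            best = (recall, threshold, config)
    return best


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.95, 0.90, 0.85, 0.60, 0.30, 0.10]
print(recall_at_precision(y_true, scores, min_precision=0.9))  # (0.6666666666666666, 0.9)
```

Fixing precision first and then maximizing recall reflects the trade-off LinkedIn describes: a wrong enforcement decision is treated as costlier than a missed catch, which can still go to a human reviewer. This sketch materializes the full grid before sampling, which is fine for small grids.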

LinkedIn says that its updated moderation flow is able to make auto-decisions on ~10% of all queued content at its established precision standard, “which is better than the performance of a typical human reviewer”.
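As a rough illustration of what an auto-decision share means, the Python sketch below routes scored items either to an automatic decision or to the human queue, then reports the fraction decided automatically. The two thresholds, the `route` and `auto_decision_rate` names, and the idea of auto-clearing low-scoring content as well as auto-actioning high-scoring content are all assumptions; in practice each threshold would be chosen on held-out data so that automated decisions meet the required precision.

```python
from typing import Dict, List, Sequence, Tuple


def route(items: Sequence[Tuple[str, float]], remove_threshold: float,
          clear_threshold: float) -> Dict[str, List[str]]:
    """Split (item_id, p_violation) pairs into automatic decisions and human review."""
    if not 0.0 <= clear_threshold < remove_threshold <= 1.0:
        raise ValueError("expected 0 <= clear_threshold < remove_threshold <= 1")
    routes: Dict[str, List[str]] = {"auto_remove": [], "auto_clear": [], "human_review": []}
    for item_id, p_violation in items:
        if p_violation >= remove_threshold:
            routes["auto_remove"].append(item_id)   # confidently violating
        elif p_violation <= clear_threshold:
            routes["auto_clear"].append(item_id)    # confidently non-violating
        else:
            routes["human_review"].append(item_id)  # ambiguous: a person decides
    return routes


def auto_decision_rate(routes: Dict[str, List[str]]) -> float:
    """Fraction of all routed items that were decided without a human reviewer."""
    total = sum(len(ids) for ids in routes.values())
    automatic = len(routes["auto_remove"]) + len(routes["auto_clear"])
    return automatic / total if total else 0.0


items = [("p1", 0.99), ("p2", 0.50), ("p3", 0.01), ("p4", 0.70), ("p5", 0.30)]
routes = route(items, remove_threshold=0.98, clear_threshold=0.02)
print(auto_decision_rate(routes))  # 0.4
```

Tightening either threshold lowers the automated share but raises the precision of each automatic decision, which is the same trade-off behind LinkedIn’s ~10% figure.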

“Due to these savings, we are able to reduce the burden on human reviewers, allowing them to focus on content that requires their review due to severity and ambiguity. With the dynamic prioritization of content in the review queue, this framework is also able to reduce the average time taken to catch policy-violating content by ~60%.”

It’s a sensible use of AI, though it could affect which content eventually gets through, depending on how well the system stays up to date and keeps detecting rule-violating posts.

LinkedIn is confident that it’ll improve the user experience, but it may be worth watching whether you notice an improvement, and see fewer rule-breaking posts in the app.

I mean, LinkedIn is less likely to see incendiary posts than other apps, so it’s probably not like you’re seeing a heap of offensive content in your LinkedIn feed anyway. But still, this updated process should enable LinkedIn to make better use of its human moderation staff, speeding up its response by better prioritizing their workflow.

And if it works, it could offer lessons for other apps looking to improve their own detection flows.

You can read LinkedIn’s full moderation system overview here.