As we head into the holiday season, when teens will be spending more time online and engaging with friends, Meta has announced a new set of updates to better protect younger users from potential exposure to predators and other harmful behaviors in the app.

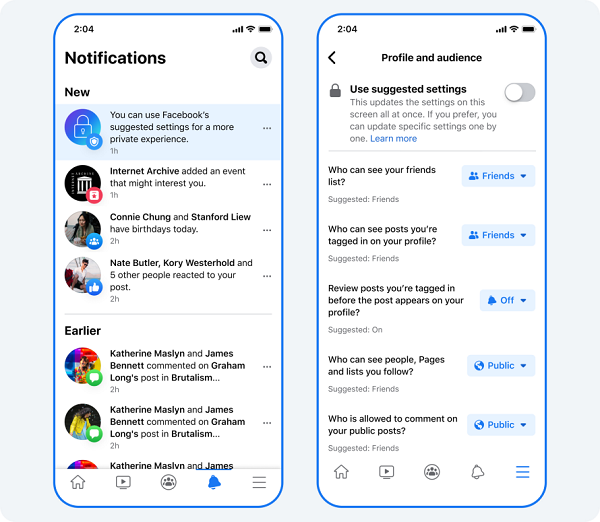

First off, Meta will now implement stricter privacy controls by default on Facebook for all users under the age of 16 who sign up for an account.

The more stringent privacy settings will limit who can see their friends list and the Pages that they follow in the app, while it will also hide posts that they’re tagged in, and stop non-connections from commenting on their public posts.

The measures will ensure that youngsters have a higher level of privacy in the app. And while they can still revert from the defaults, establishing this as the norm could go a long way toward ensuring younger users are at the least aware of these settings.

Meta implemented a similar change on Instagram in July last year, with users under the age of 16 now defaulted into private accounts, which means that non-connections can’t view or comment on their posts or Stories, while they’re also not displayed in Explore or searches.

Facebook’s new process doesn’t go quite as far, as user profiles will still be displayed in the app. But it’s essentially the same, in that it ensures that the only people who can see a young user’s content, or contact them, are people they’ve approved as connections.

I mean, kids will still approve questionable connections, so it’s not a foolproof process. But in combination with Meta’s parental control tools, the features go a long way toward providing much-needed assurance and protection for young users.

In addition to this, Meta’s also testing a new process which will limit the capacity for kids to make contact with ‘suspicious’ adults in its apps.

As per Meta:

“A ‘suspicious’ account is one that belongs to an adult that may have recently been blocked or reported by a young person, for example.”

In such cases, Meta will now stop these users from being able to message young users, while it will also remove their accounts as suggestions in young users’ ‘People You May Know’ recommendations.

“As an extra layer of protection, we’re also testing removing the message button on teens’ Instagram accounts when they’re viewed by suspicious adults altogether.”
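To make the rule concrete, here is a minimal sketch of how such a check could work. It is an illustration only, not Meta’s implementation: the `Account` fields and the helper names `is_suspicious`, `can_message_teen` and `filter_suggestions` are all hypothetical, and the only signal modeled is the one in Meta’s example (an adult recently blocked or reported by a young person).

```python
from dataclasses import dataclass


@dataclass
class Account:
    # Hypothetical fields, chosen only to mirror the example in Meta's quote.
    name: str
    is_adult: bool
    recently_flagged_by_teen: bool = False  # blocked or reported by a young person


def is_suspicious(account: Account) -> bool:
    """Assumed rule: an adult account recently blocked or reported by a teen."""
    return account.is_adult and account.recently_flagged_by_teen


def can_message_teen(sender: Account) -> bool:
    """Suspicious adults lose the ability to message young users."""
    return not is_suspicious(sender)


def filter_suggestions(candidates: list[Account]) -> list[Account]:
    """Drop suspicious accounts from a teen's 'People You May Know' list."""
    return [account for account in candidates if not is_suspicious(account)]


flagged = Account("adult_a", is_adult=True, recently_flagged_by_teen=True)
regular = Account("adult_b", is_adult=True)

print(can_message_teen(flagged))
print([account.name for account in filter_suggestions([flagged, regular])])
```

In practice the signals would be far broader than a single flag, but the shape is the same: one classification drives both the messaging restriction and the recommendation filter.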

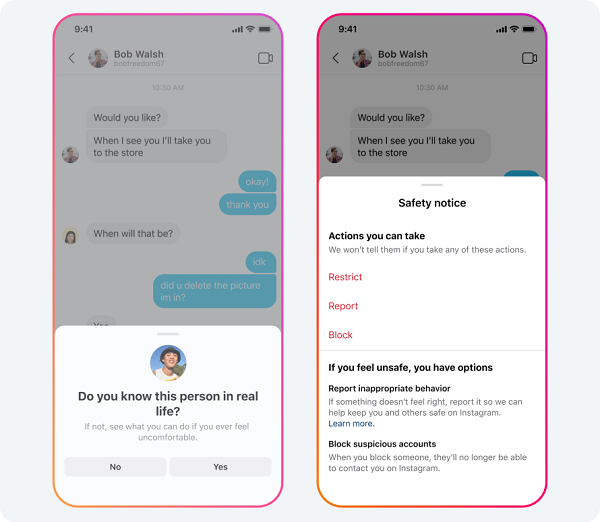

Meta’s also adding new notifications which will encourage young users to utilize its various protective tools and features as they use the app.

As you can see in these examples, Meta will now prompt users to switch on privacy features, while there’ll also be new alerts to report accounts after you block them in the app. Meta’s also adding new safety notices with information on how to, for example, deal with inappropriate messages from adults.

Finally, Meta’s also working with the National Center for Missing and Exploited Children (NCMEC) to expand its program to help teen users stop the misuse of their intimate images.

The process enables users to create an image ID for any of their intimate images, which can then be used to track instances of those pictures across the web. The user doesn’t upload their image to a database. Instead, the system scans the image on the user’s device, then creates a unique hash value, which can then be used to scan for other copies of the same image.
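As a rough illustration of the idea, the sketch below computes a fingerprint locally and compares only fingerprints, never the images themselves. It is a simplified stand-in, not the actual system: it uses SHA-256 from Python’s standard library, which only matches byte-identical copies, whereas a system meant to catch resized or re-encoded versions would need a perceptual hash instead. The function names `image_id` and `find_matches`, and the sample data, are hypothetical.

```python
import hashlib


def image_id(image_bytes: bytes) -> str:
    """Compute a fingerprint on the device; only this string would be shared."""
    # Assumption: SHA-256 as a stand-in. It matches exact copies only.
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(reported_ids: set[str], uploads: dict[str, bytes]) -> list[str]:
    """Return the names of uploads whose fingerprint is in the reported set."""
    return sorted(
        name for name, data in uploads.items() if image_id(data) in reported_ids
    )


# The user hashes the image locally and submits only the hash.
private_image = b"example bytes standing in for a private image"
reported = {image_id(private_image)}

# A participating platform hashes incoming uploads and compares hashes.
uploads = {
    "copy.png": private_image,
    "other.png": b"bytes of an unrelated image",
}
print(find_matches(reported, uploads))
```

The key property is that the hash cannot be reversed into the picture, so the matching service never needs to hold the image itself.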

Meta launched an initial version of this process with a range of partners in Europe last year. It’s now expanding the system to more regions.

Child protection is a key area of focus for all social apps, and for Meta in particular, given the research into how Facebook and Instagram have been utilized by predators.

According to one report, over a quarter of 9- to 12-year-olds have experienced sexual solicitation online, with one in eight indicating that they’ve been asked to send a nude photo or video. And when you also consider that 45% of kids aged 9 to 12 use Facebook every day, despite its age limits, you start to get some sense of the issue at hand, and why Meta specifically needs to implement more restrictive, protective measures.

These measures are not, however, a solution, because there isn’t one. Kids who grow up online are increasingly savvy about how to circumvent systems and avoid detection. To them, it’s a challenge, and a means to access areas of the web that they’re not supposed to, but they’re also opening themselves up to significant risk in the process.

The best approach is digital literacy education, along with ensuring, as best you can, that you maintain an open dialogue with your kids about the risks and dangers of online connection. That’s not always possible, but a combination of all of these tools will hopefully provide additional protection and awareness.