This serves as a good reminder of the need to remain vigilant in policing social media misuse and manipulation, and to improve education around the same.

Today, Meta has announced that it’s detected and removed two significant new influence operations, stemming from state-based actors in Russia and China, which had both sought to use Meta’s platforms to sway public opinion about the invasion of Ukraine, as well as other political subjects.

The main new network identified was based in Russia, and comprised more than 1,600 Facebook accounts and 700 Facebook Pages, which had sought to influence global opinion about the Ukraine conflict.

As per Meta:

“The operation began in May of this year and centered around a sprawling network of over 60 websites carefully impersonating legitimate websites of news organizations in Europe, including Spiegel, The Guardian and Bild. There, they would post original articles that criticized Ukraine and Ukrainian refugees, supported Russia and argued that Western sanctions on Russia would backfire.”

As you can see in this example, the group created closely modeled copies of well-known news websites to push their agenda.

The group then promoted these posts across Facebook, Instagram, Telegram and Twitter, while also, interestingly, using petition websites like Change.org to expand their messaging.

“On a few occasions, the operation’s content was amplified by the Facebook Pages of Russian embassies in Europe and Asia.”

Meta says that this is the largest and most complex Russian-origin operation that it’s disrupted since the beginning of the war in Ukraine, and that it presents ‘an unusual combination of sophistication and brute force’.

Which is a concern. Manipulation efforts like this are always evolving, but the fact that this one replicated well-known news websites, and likely convinced a lot of people as a result, underlines the need for ongoing vigilance.

It also highlights the need for digital literacy training, which should become part of the educational curriculum in all regions.

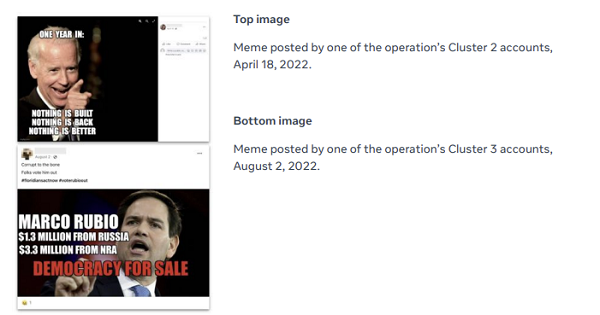

The second network detected originated from China, and also sought to influence public opinion around US domestic politics and foreign policy towards China and Ukraine.

The China-based cluster was much smaller (comprising 81 Facebook accounts), but once again provides an example of how political activists are looking to use social media’s influence and algorithms to manipulate the public, in increasingly advanced ways.

For Russia, in particular, social media has become a key weapon, with various groups already detected and removed by Meta throughout the year.

- In February, Meta removed a Russia-originated network which had been posing as news editors from Kyiv, and publishing claims about the West ‘betraying Ukraine and Ukraine being a failed state’.

- In Q1, Meta also removed a network of around 200 accounts operated from Russia which had been coordinating to falsely report people for various violations, primarily targeting Ukrainian users.

- Meta has also detected activity linked to the Belarusian KGB, which had been posting in Polish and English about Ukrainian troops surrendering without a fight, and the nation’s leaders fleeing the country.

- Meta’s also been tracking activity from accounts formerly associated with the Russian Internet Research Agency (IRA), which had been the primary team that promoted misinformation in the lead-up to the 2016 US election, as well as attacks by ‘Ghostwriter’, a group which has been targeting Ukrainian military personnel in an attempt to gain access to their social media accounts.

- In Q2, Meta reported that it had detected a network of more than 1,000 Instagram accounts operating out of St Petersburg which had also been looking to promote pro-Russia perspectives on the Ukraine invasion.

Indeed, after seeing success in swaying online discussion back in 2016, Russia clearly views social media as a key avenue for winning support, and/or sparking dissent, which underlines, yet again, why the platforms need to remain vigilant in ensuring that they are not being used for such purposes.

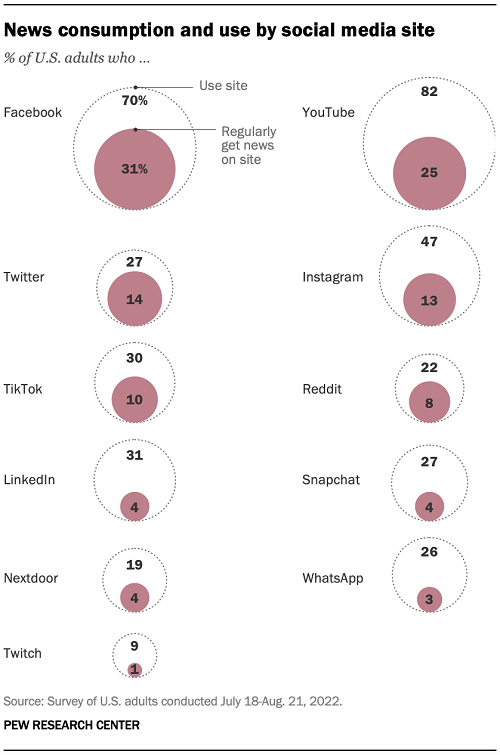

Because the reality is that social media platforms are not harmless. They’re not just fun, time-wasting websites where you go to catch up on the latest from friends and family. Increasingly, they’ve become key connective tools in many ways, with the most recent data from Pew Research showing that 31% of Americans now regularly get news content from Facebook.

And Facebook’s influence in this regard is likely more significant than that, with news and opinions shared by the people that you know and trust likely also having an impact, in some way, on your own thoughts and considerations.

That’s where Facebook’s true power lies: in showing you what the people you trust the most think about the latest news stories. That also now seems to be what’s driving users away, with many apparently fed up with the constant flood of political content in the app and moving to other, more entertainment-focused platforms instead.

That’s been a concern for some time – in Meta’s Q4 2020 earnings announcement, CEO Mark Zuckerberg noted that:

“One of the top pieces of feedback we’re hearing from our community right now is that people don’t want politics and fighting to take over their experience on our services. So one theme for this year is that we’re going to continue to focus on helping millions more people participate in healthy communities and we’re going to focus even more on being a force for bringing people closer together.”

Whether that’s worked is not clear, but Meta’s still working to put more focus on entertainment and lighter content in the main News Feed, in order to dilute the impact of divisive political opinions.

Which could also reduce the capacity for coordinated efforts by state-based actors like this to succeed – but right now, Facebook remains a powerful platform for influence in this respect, especially given its algorithmic amplification of posts that generate more comments and debate.

More divisive, incendiary posts trigger more response, which then amplifies their reach across The Social Network. Given this, you can see how Facebook has inadvertently provided the perfect stage for these efforts, with the reach and resonance to push them out to more communities.

As such, it’s good that Meta has upped its efforts to detect these pushes, but it also serves as a reminder of how the platform can be used by such groups, and why that misuse is such a threat to democracy.

Because really, we don’t always know whether we’re being influenced. One recent report, for example, suggested that the Chinese Government has played a role in helping TikTok develop algorithms that promote harmful, dangerous and anti-social trends in the app, in order to sow discord and disorder among western youth.

By contrast, the algorithm in the Chinese version of the app, Douyin, promotes positive behaviors, as defined by the CCP, in order to better incentivize achievement, and support for such behaviors, among Chinese youngsters.

Is that another form of social media manipulation? Should that also be factored into investigations of this kind?

These latest findings show that this remains a significant threat, even if it seems like such efforts have been reduced over time.