Meta has announced changes to its policies on housing, employment and credit ads, following a recent settlement with the US Department of Housing and Urban Development (HUD) over the potential for discriminatory use of Facebook ads within these categories.

The settlement specifically relates to housing ads, and the way that Facebook ads can be used to target specific groups with housing promotions, which could be used to exclude certain audiences from that outreach.

These new changes will, ideally, eliminate that possibility, and Meta has also chosen to extend the same approach to employment and credit ads.

As per Meta:

“To protect against discrimination, advertisers running housing ads on our platforms already have a limited number of targeting options they can choose from while setting up their campaigns, including a restriction on using age, gender or ZIP code. Our new method builds on that foundation, and strives to make additional progress toward a more equitable distribution of ads through our ad delivery process.”

That updated method includes a new “variance reduction system” within Meta’s ad targeting process, which is designed to correct for potential errors in this process.

Meta says that it’s been working with HUD for more than a year on the new system, which will expand the use of machine learning technology to ensure that the age, gender and estimated race or ethnicity of a housing ad’s overall audience matches the demographic mix of the population eligible to see that ad.

“We’re making this change in part to address feedback we’ve heard from civil rights groups, policymakers and regulators about how our ad system delivers certain categories of personalized ads, especially when it comes to fairness. So while HUD raised concerns about personalized housing ads specifically, we also plan to use this method for ads related to employment and credit. Discrimination in housing, employment and credit is a deep-rooted problem with a long history in the US, and we are committed to broadening opportunities for marginalized communities in these spaces and others.”
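To make the matching idea concrete, here is a minimal sketch of the kind of comparison described above: measure each group's share of the people who actually saw an ad against that group's share of the eligible audience. This is not Meta's implementation, which has not been published in this form. The function names, the group labels and the sample numbers are all hypothetical, made up purely for illustration.

```python
from collections import Counter


def demographic_shares(group_labels):
    """Return each group's share of an audience as a fraction of 1."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def delivery_gaps(eligible, delivered):
    """Delivered share minus eligible share, per group.

    A positive value means the group saw the ad more often than its
    share of the eligible audience would suggest; negative means less.
    """
    eligible_shares = demographic_shares(eligible)
    delivered_shares = demographic_shares(delivered)
    groups = set(eligible_shares) | set(delivered_shares)
    return {
        group: delivered_shares.get(group, 0.0) - eligible_shares.get(group, 0.0)
        for group in groups
    }


# Hypothetical data: the eligible audience is split evenly between two
# groups, but the ad was delivered unevenly.
eligible = ["group_a"] * 50 + ["group_b"] * 50
delivered = ["group_a"] * 70 + ["group_b"] * 30

gaps = delivery_gaps(eligible, delivered)
```

In this toy example, `group_a` ends up over-represented among viewers and `group_b` under-represented by the same margin. A system like the one Meta describes would presumably adjust delivery to shrink gaps of this kind, though how it does so is not detailed in the announcement.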

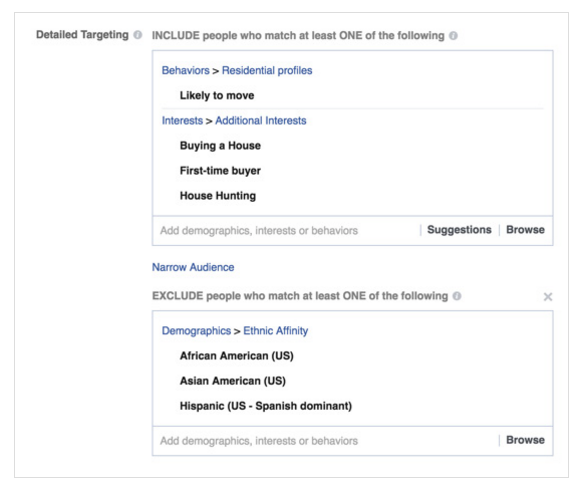

Meta’s advanced ad targeting systems have come under much scrutiny on this front, with a 2016 ProPublica investigation finding that Facebook’s system enabled advertisers to exclude Black, Hispanic, and other “ethnic affinities” from seeing ads.

As Meta notes, it’s since implemented various changes to limit misuse of its ads, but many have argued that it hasn’t gone far enough, with advertisers still able to exclude certain audiences using Meta’s advanced tools.

This new initiative aims to provide more protections and reduce bias in ad delivery, in partnership with key organizations.

Meta also says that it’s sunsetting its Special Ad Audiences tool:

“In 2019, in addition to eliminating certain targeting options for housing, employment and credit ads, we introduced Special Ad Audiences as an alternative to Lookalike Audiences. But the field of fairness in machine learning is a dynamic and evolving one, and Special Ad Audiences was an early way to address concerns. Now, our focus will move to new approaches to improve fairness, including the method we announced today.”

Given the focus of these changes, the impact should be minimal, as they’re designed to stamp out types of targeting that shouldn’t be used anyway. But there may be flow-on effects, and if you’re running ads in these areas, or via these tools, it’ll be worth taking note of the changes within your process.

Meta says that, given the complexities involved in the process, these changes “will take some time to test and implement.”