As part of its ongoing efforts to protect young users in its apps, Meta has today announced that it’s signed on as a founding member of a new initiative called “Project Lantern,” which will see various online platforms working together to track and respond to incidents of child abuse.

Overseen by the Tech Coalition, Project Lantern will facilitate cross-platform data sharing, in order to stop predators who are detected on one app from simply moving their activity to another.

As per Meta:

“Predators don’t limit their attempts to harm children to individual platforms. They use multiple apps and websites and adapt their tactics across them all to avoid detection. When a predator is discovered and removed from a site for breaking its rules, they may head to one of the many other apps or websites they use to target children.”

Project Lantern, which is launching with Discord, Google, Mega, Quora, Roblox, Snap, and Twitch also among its participating partners, will provide a centralized platform for reporting and sharing information to stamp out such activity.

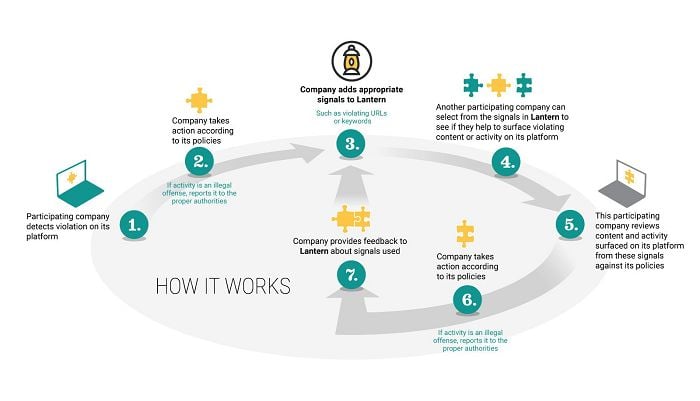

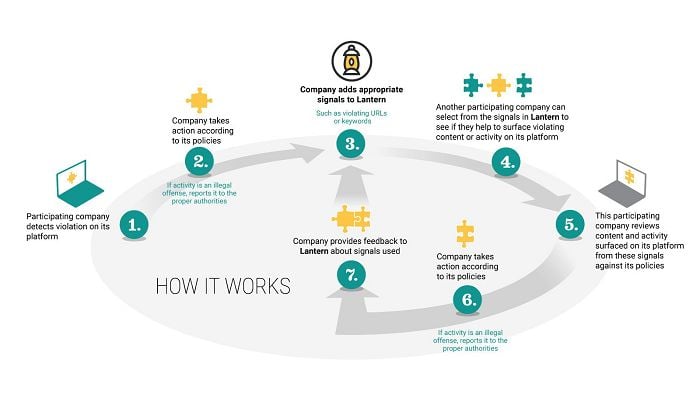

As you can see in this diagram, the Lantern program will enable tech platforms to share a variety of signals about accounts and behaviors that violate their child safety policies. Lantern participants will then be able to use this information to conduct investigations on their own platforms and take action, with the outcomes of those actions then also uploaded to the Lantern database.

It’s an important initiative that could have a significant impact, and it also extends Meta’s broader partnership push to improve the collective detection and removal of harmful content, including coordinated misinformation online.

At the same time, though, Meta’s own internal processes around protecting teen users have once again been called into question.

This week, former Meta engineer Arturo Béjar testified before a Senate Judiciary subcommittee to share his concerns about the dangers that young users are exposed to on Facebook and Instagram.

As per Béjar:

“The amount of harmful experiences that 13- to 15-year olds have on social media is really significant. If you knew, for example, at the school you were going to send your kids to, that the rates of bullying and harassment or unwanted sexual advances were what [Meta currently sees], I don’t think that you would send your kids to the school.”

Béjar, who worked on cyberbullying countermeasures for Meta between 2009 and 2015, is speaking from direct experience, after his own teenage daughter experienced unwanted sexual advances and harassment on Instagram.

“It’s time that the public and parents understand the true level of harm posed by these ‘products’ and it’s time that young users have the tools to report and suppress online abuse.”

Béjar is calling for tighter regulation of social platforms with regard to teen safety, noting that Meta executives are well aware of such concerns but choose not to address them for fear of harming user growth, among other potential impacts.

It may soon have to, however, with the U.S. Congress considering new legislation that would require social media platforms to provide parents with more tools to protect children online.

Meta already offers a range of tools on this front, but Béjar says that Meta could do more in terms of the design of its apps and the accessibility of such tools in-stream.

It’s another element that Meta will need to address, and one that could, in some ways, be linked to Project Lantern, which may provide more insight into how such incidents occur across platforms and into the best approaches to stopping them.

But the bottom line is that this remains a major concern for all social apps. And as such, any effort to improve detection and enforcement is a worthy investment.
