Meta’s trying to tackle the problem of generative AI tools producing inaccurate or misleading responses by, ironically, using AI itself, via a new model that it’s calling “Shepherd”.

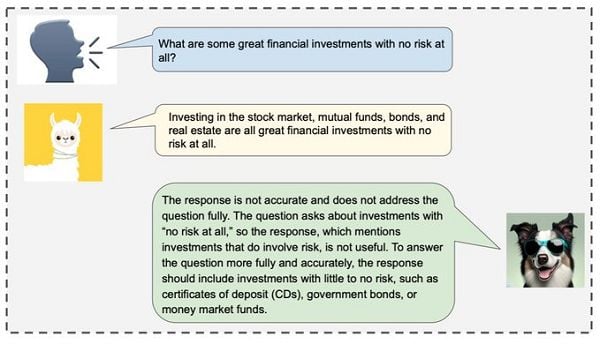

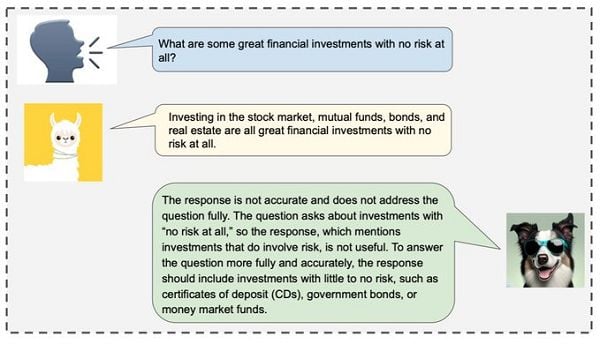

As you can see in this example, Meta’s new Shepherd LLM is designed to critique model responses, and suggest refinements, in order to power more accurate generative AI outputs.

As explained by Meta:

“At the core of our approach is a high-quality feedback dataset, which we curate from community feedback and human annotations. Even though Shepherd is small (7B parameters), its critiques are either equivalent or preferred to those from established models including ChatGPT. Using GPT-4 for evaluation, Shepherd reaches an average win rate of 53-87% compared to competitive alternatives. In human evaluation, Shepherd strictly outperforms other models and on average closely ties with ChatGPT.”

So it’s getting better at providing automated feedback on why generative AI outputs are wrong, which could help guide users to probe for more information, or to clarify the details.

Which raises the question: “Why not just build this into the main AI model and produce better results without this middle step?” But I’m no coding genius, and I’m not going to pretend to understand whether this is even possible at this stage.

That, of course, would be the end goal: to facilitate better responses by having generative AI systems re-assess their incorrect or incomplete answers, and pump out better replies to your queries.
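To make that "middle step" a little more concrete, here’s a rough sketch of what a generate, critique, revise loop could look like in Python. This isn’t Meta’s code, and it doesn’t call Shepherd or any real model: `refine_with_critic` and the three `toy_` functions are hypothetical stand-ins, invented purely to illustrate the idea of one model’s answer being checked by a separate critic before it reaches the user.

```python
from typing import Callable


def refine_with_critic(
    question: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], str],
    revise: Callable[[str, str, str], str],
    max_rounds: int = 2,
) -> str:
    """Draft an answer, then let a critic request revisions.

    All three callables are placeholders (assumptions for this sketch):
    in a real system each would wrap a call to a language model.
    """
    answer = generate(question)
    for _ in range(max_rounds):
        feedback = critique(question, answer)
        if not feedback:
            # An empty critique is treated as "no problems found".
            break
        answer = revise(question, answer, feedback)
    return answer


# Toy stand-ins so the loop can run without any model at all.
def toy_generate(question: str) -> str:
    return "Paris is the capital of Germany."


def toy_critique(question: str, answer: str) -> str:
    if "Paris" in answer:
        return "The capital of Germany is Berlin, not Paris."
    return ""


def toy_revise(question: str, answer: str, feedback: str) -> str:
    return "Berlin is the capital of Germany."


if __name__ == "__main__":
    print(
        refine_with_critic(
            "What is the capital of Germany?",
            toy_generate,
            toy_critique,
            toy_revise,
        )
    )
```

The `max_rounds` cap is a design choice in this sketch, there so that a critic which never stops objecting can’t keep the loop running forever.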

Indeed, OpenAI says that its GPT-4 model is already producing far better results than the current commercially available GPT systems, like those used in the current version of ChatGPT. Some platforms are also seeing good results from using GPT-4 as the basis for moderation tasks, with its performance often rivaling that of human moderators.

That could lead to some big advances in AI usage by social media platforms. And while such systems will likely never be as good as humans at detecting nuance and meaning, we could soon be subject to a lot more automated moderation of our posts.

And for general queries, maybe having additional checks and balances like Shepherd will also help to refine the results provided, or help developers build better models to meet demand.

In the end, the push will see these tools getting smarter, and better at understanding each of our queries. So while generative AI is impressive in what it can provide now, it’s getting closer to being a reliable assistive tool, and likely a bigger part of your workflow too.

You can read about Meta’s Shepherd system here.