Meta has announced a new initiative designed to establish agreed parameters around cybersecurity considerations in the development of large language models (LLMs) and generative AI tools. The company hopes the broader industry will adopt the project as a key step towards facilitating greater AI security.

Called “Purple Llama”, based on its own Llama LLM, the project aims to “bring together tools and evaluations to help the community build responsibly with open generative AI models.”

According to Meta, the Purple Llama project aims to establish the first industry-wide set of cybersecurity safety evaluations for LLMs.

As per Meta:

“These benchmarks are based on industry guidance and standards (e.g., CWE and MITRE ATT&CK) and built in collaboration with our security subject matter experts. With this initial release, we aim to provide tools that will help address a range of risks outlined in the White House commitments on developing responsible AI.”

The White House’s recent AI safety directive urges developers to establish standards and tests to ensure that AI systems are secure, to protect users from AI-based manipulation, and to address other considerations that can, ideally, stop AI systems from taking over the world.

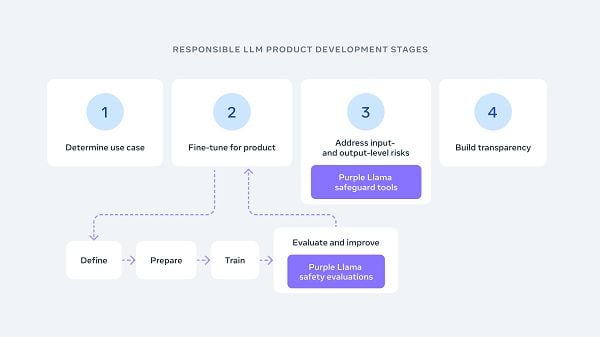

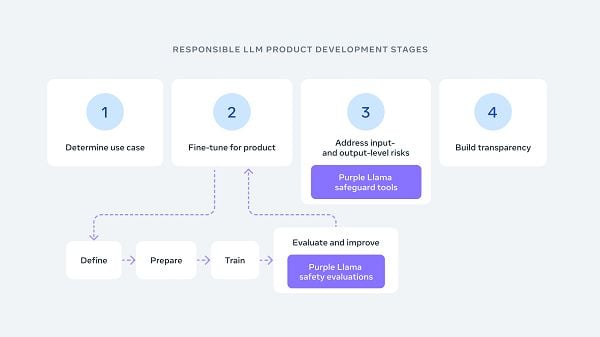

These are the driving parameters for Meta’s Purple Llama project, which will initially include two key elements:

- CyberSec Eval – Industry-agreed cybersecurity safety evaluation benchmarks for LLMs.

- Llama Guard – A framework for protecting against potentially risky AI outputs.

“We believe these tools will reduce the frequency of LLMs suggesting insecure AI-generated code and reduce their helpfulness to cyber adversaries. Our initial results show that there are meaningful cybersecurity risks for LLMs, both with recommending insecure code and for complying with malicious requests.”

The Purple Llama project will partner with members of the newly formed AI Alliance, which Meta is helping to lead. The project also includes Microsoft, AWS, Nvidia, and Google Cloud as founding partners.

So what’s “purple” got to do with it? I could explain, but it’s pretty nerdy, and as soon as you read it you’ll regret having that knowledge take up space inside your head.

AI safety is fast becoming a critical consideration, as generative AI models evolve at rapid speed, and experts warn of the dangers in building systems that could potentially “think” for themselves.

That’s long been a fear of sci-fi tragics and AI doomers: that one day we’ll create machines that can outthink our merely human brains, effectively making humans obsolete and establishing a new dominant species on the planet.

We’re a long way from this being a reality, but as AI tools advance, those fears also grow, and if we don’t fully understand the extent of possible outputs from such processes, there could indeed be significant problems stemming from AI development.

The counter to that is that even if U.S. developers slow their progress, that doesn’t mean that researchers in other markets will follow the same rules. And if Western governments impede progress, that could also become an existential threat, as potential military rivals build more advanced AI systems.

The answer, then, seems to be greater industry collaboration on safety measures and rules, which will then ensure that all the relevant risks are being assessed and factored in.

Meta’s Purple Llama project is another step on this path.

You can read more about the Purple Llama initiative here.