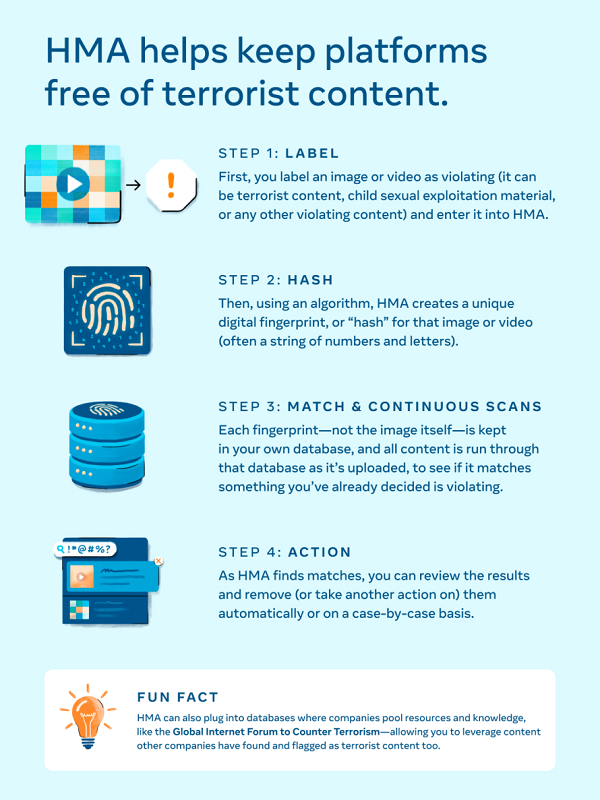

Meta is looking to support broader industry efforts to detect and remove terrorism-related content with the release of its Hasher-Matcher-Actioner (HMA) tool, which identifies copies of images or videos online, enabling better detection without making copies of the source material itself.

As you can see in this overview, the goal of Meta’s HMA system is to help identify offensive content without redistributing it for that purpose, limiting the number of copies of such material in circulation while still enabling detection.
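To make the hash-then-match idea concrete, here's a minimal Python sketch. It is not Meta's HMA code, and it does not reproduce whichever hashing algorithms HMA actually uses. The toy `average_hash` function, the `match` helper, the sample pixel grids, the label name and the distance threshold are all assumptions made up for illustration. The point is only that platforms can compare compact fingerprints of content rather than passing the content itself around.

```python
from typing import Dict, List, Optional

# Illustrative only: a toy "average hash", NOT the algorithm used by HMA.
Image = List[List[int]]  # grayscale pixel rows, values 0-255


def average_hash(pixels: Image) -> int:
    """Hasher: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def match(candidate: int, shared_hashes: Dict[str, int],
          max_distance: int = 2) -> Optional[str]:
    """Matcher: return the label of the first shared hash close to the candidate."""
    for label, known in shared_hashes.items():
        if hamming_distance(candidate, known) <= max_distance:
            return label
    return None


# Hypothetical flagged image, hashed once; only the hash is shared.
flagged = [[10, 200, 10, 200],
           [200, 10, 200, 10],
           [10, 200, 10, 200],
           [200, 10, 200, 10]]
shared_hashes = {"flagged-item-1": average_hash(flagged)}

# A slightly altered copy (brighter, one pixel changed) and an unrelated image.
altered_copy = [[180, 205, 15, 205],
                [205, 15, 205, 15],
                [15, 205, 15, 205],
                [205, 15, 205, 15]]
unrelated = [[0, 0, 255, 255] for _ in range(4)]

copy_result = match(average_hash(altered_copy), shared_hashes)
unrelated_result = match(average_hash(unrelated), shared_hashes)

# Actioner: here we just print; a real system would queue for review or removal.
print("altered copy ->", copy_result)
print("unrelated    ->", unrelated_result)
```

In this sketch, the altered copy still lands within the distance threshold of the shared hash, while the unrelated image does not. The database holds only the fingerprints, never the flagged image itself.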

The announcement of the broader launch of HMA comes as Meta prepares to take on the chair role of the Operating Board of the Global Internet Forum to Counter Terrorism (GIFCT), a collection of tech companies that have banded together to tackle terrorist content online through research, technical collaboration and knowledge sharing.

Meta’s hoping to use its time in the role to expand collaboration, through the use of tools like HMA.

As explained by Meta:

“The more companies participate in the hash sharing database the better and more comprehensive it is — and the better we all are at keeping terrorist content off the internet, especially since people will often move from one platform to another to share this content.”

Meta says that it’s made big advances in the detection and removal of harmful content, with terror-related material, in particular, seeing far less reach across Facebook and Instagram. Its messaging apps, however, are moving towards full encryption by default, which could allow such material to spread more widely without being detected. Ideally, Meta’s continued work will help to combat that spread on all fronts, where possible, while also aligning with evolving consumer privacy expectations.

It’s a critical area for all social platforms, and while some are now softening their approach to some forms of potential hate speech, the lessons learned over time are seemingly helping platforms to address such issues and limit the spread of dangerous movements.