The US Midterms are coming up, and Meta has today outlined how it’s working to combat the spread of political misinformation within its apps, via a range of measures and awareness tools designed to help users make more informed choices about their votes in the upcoming polls.

As explained by Meta:

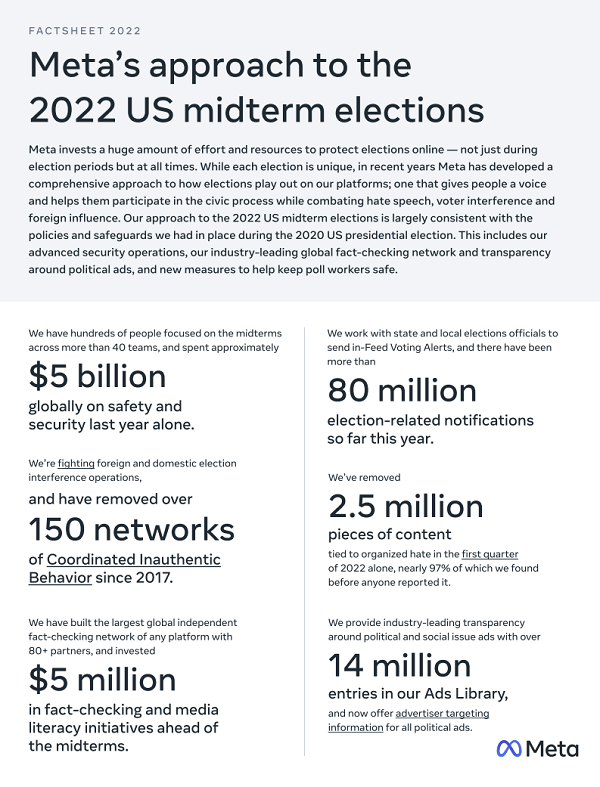

“Meta invests a huge amount to protect elections online – not just during election periods but at all times. We spent approximately $5 billion on safety and security last year alone, and have hundreds of people dedicated to this work permanently embedded across more than 40 teams. With each major election around the world – including national elections this year in France and the Philippines – we incorporate the lessons we learn to help stay ahead of emerging threats.”

Meta says that its approach to the 2022 US Midterms will incorporate learnings from its past efforts, and will entail a range of elements, including:

- Warning labels on fact-checked posts to help guide users to credible information around campaign claims

- An updated political ads Spend Tracker in its Ad Library Report, in order to help people understand how much specific candidates and political parties are spending to reach voters

- The prohibition of new political, electoral and social issue ads during the final week of the election campaign (as it did during the 2020 Presidential Election)

- Proactive efforts to remove misinformation about voting, as well as calls for violence related to voting, voter registration, or the administration or outcome of an election

- New voting participation stickers on Facebook and Instagram to encourage civic participation

Meta will also seek to highlight critical information about voter registration and state-based election updates.

“State and local election officials continue to use our Voting Alerts on Facebook to send the latest information about registering and voting to people in their communities, and there have been more than 80 million election-related notifications so far this year. We’re also elevating post Comments from local elections officials to ensure people have as much reliable information as possible about how, when and where to vote.”

And for the first time, Meta will also display election-related in-Feed notifications in a language other than English, alongside English, if it determines that the second language may be better understood.

“For example, if a person has their language set to English but is interacting with a majority of content in Spanish, then we will show the voting notifications in both English and Spanish.”

The measures will build on Meta’s existing fact-checking and misinformation tools to help solidify its approach to election integrity, and facilitate more accurate, informed discussion within its apps.

Meta has also published a new fact sheet outlining its various efforts on this front.

Separately, Meta says that it's taking stronger action against militant groups that seek to mobilize support via its platforms.

“We’ve banned more than 270 white supremacist organizations, and removed 2.5 million pieces of content tied to organized hate globally in the first quarter of 2022. Of the content we removed, nearly 97% of it was found by our systems before someone reported it.”

That's become a key concern in the US, with the Capitol riots underlining the deep divides in American political discourse, which have been further inflamed by the recent FBI raid on the home of former President Donald Trump.

Indeed, in the wake of the Mar-a-Lago raid, tensions are once again rising, with some even suggesting that a civil war is brewing amid ideological discontent, which also ties back to the Supreme Court's recent ruling on abortion, controversies over border protection, COVID mitigation measures, and more.

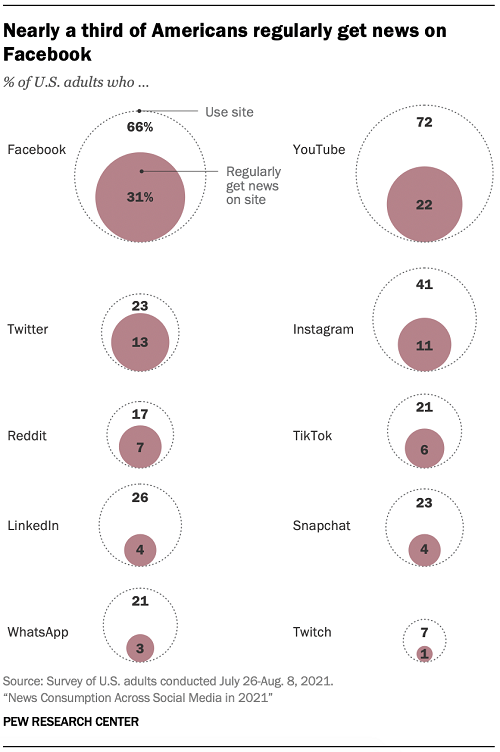

It’s an increasingly delicate situation, and no matter how you look at it, Facebook now plays a key role in the dissemination of news and information, with a third of US adults regularly accessing news content in the app.

Which is why Meta needs to take measures to ensure that it’s not amplifying false or misleading information.

Of course, critics would say that Meta should stay out of it, and let the people share the information that they deem to be accurate. But that’s simply not tenable, due to the incentives that digital platforms have inadvertently built into news coverage, which now unfairly skew outlets towards more divisive, partisan takes, as a means to maximize engagement.

Because what gets the most engagement? Content that sparks emotion, with ‘anger’ and ‘joy’ being the most effective triggers for prompting viral sharing.

As such, for news outlets to play into online algorithms and maximize exposure for their websites, they need to focus on these elements. That's why you see so many extreme, almost absurd takes from media commentators every day: they know these comments will get people talking, which boosts their click-through rates and earns them more money from ads.

It's often not ideology, it's business, and in the current landscape it makes business sense to be provocative, to poke people based on their beliefs, and to get them to react, in the form of more engagement.

But as we've seen, the flow-on impacts can be significant, leading to unjust decisions, flawed institutional responses, and actual, real-world violence.

These aren't just words, or opinions that people can easily shrug off. Enabling the amplification of these movements can lead to significant harm, which is why Meta, and all social platforms, need to take responsibility for their role in this, by assessing how they may be unfairly influencing political opinion.

You can check out Meta’s full midterms fact sheet here.