With the use of generative AI on the rise, Meta is working to establish new rules around AI disclosure in its apps. These will put more onus on users to declare the use of AI in their content, and will also, ideally, introduce new systems to detect AI usage via technical means.

Technical detection won’t always be possible, as most digital watermarking options are easily subverted. But ideally, Meta’s hoping to establish new industry standards around AI detection, working in partnership with other providers to improve AI transparency and set new working rules for highlighting AI-generated content in-stream.

As explained by Meta:

“We’re building industry-leading tools that can identify invisible markers at scale – specifically, the “AI generated” information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.”

These technical detection measures will ideally enable Meta, and other platforms, to label content created with generative AI wherever it appears, so that all users are better informed about synthetic content.

That’ll help to reduce the spread of misinformation as a result of AI, though there are limitations on this capacity within the current AI landscape.
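To make the idea of “invisible markers” a little more concrete, here is a minimal sketch of what checking for one such signal could look like. It assumes the generator has written the IPTC digital source type value `trainedAlgorithmicMedia` into an uncompressed XMP metadata packet inside the image file. The function names are hypothetical, and this is not Meta’s implementation. Verifying a C2PA manifest also involves cryptographic checks that are not shown here.

```python
from pathlib import Path

# IPTC NewsCodes URI for media created by a generative model.
# Assumption: the generating tool embedded this value, uncompressed,
# in the image's XMP metadata packet.
AI_SOURCE_TYPE = (
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def has_ai_source_marker(data: bytes) -> bool:
    """Return True if the raw bytes contain the IPTC 'AI generated' URI."""
    return AI_SOURCE_TYPE in data


def file_has_ai_source_marker(path: str) -> bool:
    """Hypothetical helper: run the same check on a file on disk."""
    return has_ai_source_marker(Path(path).read_bytes())


# Usage with in-memory bytes standing in for image files.
labeled = b"<x:xmpmeta>" + AI_SOURCE_TYPE + b"</x:xmpmeta>"
stripped = b"<x:xmpmeta></x:xmpmeta>"
print(has_ai_source_marker(labeled))
print(has_ai_source_marker(stripped))
```

The second case also hints at the limitation: a metadata-only signal like this disappears as soon as the file is re-saved or screenshotted without it.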

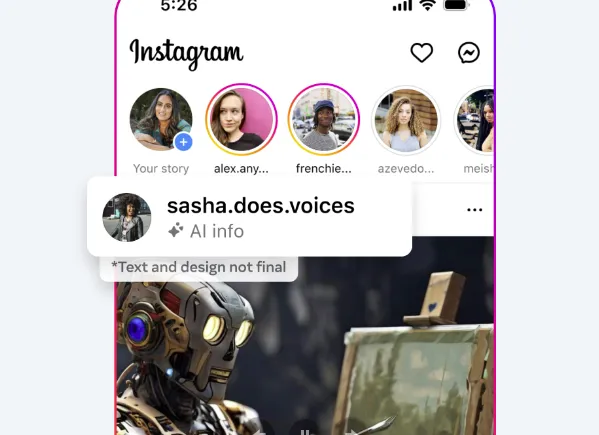

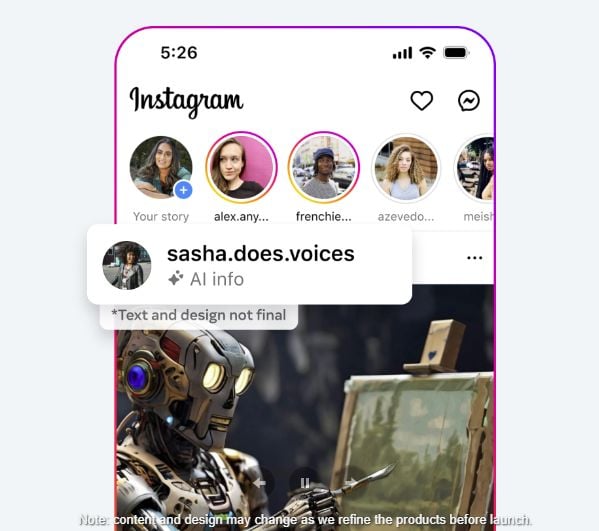

“While companies are starting to include signals in their image generators, they haven’t started including them in AI tools that generate audio and video at the same scale, so we can’t yet detect those signals and label this content from other companies. While the industry works towards this capability, we’re adding a feature for people to disclose when they share AI-generated video or audio so we can add a label to it. We’ll require people to use this disclosure and label tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered, and we may apply penalties if they fail to do so.”

Which is a key concern within AI development more broadly, and something that Google, in particular, has repeatedly sounded the alarm about.

While the development of new generative AI tools like ChatGPT is a major leap for the technology, Google’s view is that we should be taking a more cautious approach in releasing such tools to the public, due to the risk of harm associated with misuse.

Already, we’ve seen generative AI images cause confusion, from more innocent examples, like The Pope in a puffer jacket, to more serious ones, like the aftermath of a fake explosion outside The Pentagon. Unlabeled and unconfirmed, it’s very hard to tell what’s true and what’s not, and while the broader internet has debunked these examples fairly rapidly, you can see how, in certain contexts, like, say, elections, the incentives of both sides could make this more problematic.

Image labeling will improve this, and again, Meta says that it is developing digital watermarking options that will be harder to side-step. But as it also notes, audio and video AI is not detectable as yet.

And we’ve already seen this in use by political campaigns.

Which is why some AI experts have repeatedly raised concerns, and it does seem somewhat problematic that we’re implementing safeguards for these tools in retrospect, after they’ve been put in the hands of the public.

Surely, as Google suggests, we should be developing these tools and systems first, then looking at deployment.

But as with all technological shifts, most regulation will come in retrospect. Indeed, the U.S. Government has started convening working groups on AI regulation, which has set the wheels in motion on an eventual framework for improved management.

Which will take years, and with a range of important elections being held around the world in 2024, it does seem like we’ve got the chicken and the egg of this situation the wrong way around.

But we can’t stop progress, because if the U.S. slows down, China won’t, and Western nations could end up falling behind. So we need to push ahead, which will open up all kinds of security loopholes in the coming election period.

And you can bet that AI is going to play a part in the U.S. Presidential race.

Maybe, in future, Meta’s efforts, combined with those of other tech giants and lawmakers, will facilitate more safeguards, and it is good that critical work is now being done on this front.

But it’s also concerning that we’re trying to re-cork a genie that’s already long been unleashed.