Meta has today showcased two new generative AI projects that will eventually enable Facebook and Instagram users to create videos from text prompts and to make customized, in-stream edits to images, which could have a range of valuable applications.

Both projects are based on Meta’s “Emu” AI research project, which explores new ways to use generative AI prompts for visual projects.

The first, called “Emu Video”, will enable you to create short video clips based on text prompts.

1️⃣ Emu Video

This new text-to-video model leverages our Emu image generation model and can respond to text-only, image-only or combined text & image inputs to generate high quality video. Details ➡️ https://t.co/88rMeonxup

It uses a factorized approach that not only allows us… pic.twitter.com/VBPKn1j1OO

— AI at Meta (@AIatMeta) November 16, 2023

As you can see in these examples, Emu Video will be able to create high-quality video clips based on simple text or still-image inputs.

As explained by Meta:

“This is a unified architecture for video generation tasks that can respond to a variety of inputs: text only, image only, and both text and image. We’ve split the process into two steps: first, generating images conditioned on a text prompt, and then generating video conditioned on both the text and the generated image. This “factorized” or split approach to video generation lets us train video generation models efficiently.”
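To make the two-step “factorized” idea more concrete, here is a minimal Python sketch. It is not Meta’s code: `generate_image`, `generate_video` and `factorized_text_to_video` are hypothetical stand-ins that return placeholder frames rather than real pixels, and the 512×512, four-second, 16 fps defaults are simply the output specs Meta has quoted. What it shows is the routing Meta describes: a text-only prompt first produces an image, the video step is then conditioned on both the text and that image, and a user-supplied image can skip the first step entirely.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Frame:
    """Placeholder for an image; records only its size and what conditioned it."""
    width: int
    height: int
    source: str


@dataclass
class Video:
    prompt: str
    frames: List[Frame]
    fps: int


def generate_image(prompt: str, size: int = 512) -> Frame:
    # Hypothetical step 1: a text-to-image model (stand-in, no real generation).
    return Frame(size, size, source=f"text:{prompt}")


def generate_video(prompt: str, first_frame: Frame,
                   seconds: int = 4, fps: int = 16) -> Video:
    # Hypothetical step 2: a video model conditioned on BOTH the text and an image.
    n_frames = seconds * fps  # 4 s at 16 fps -> 64 frames
    frames = [
        Frame(first_frame.width, first_frame.height,
              source=f"{first_frame.source}|frame:{i}")
        for i in range(n_frames)
    ]
    return Video(prompt=prompt, frames=frames, fps=fps)


def factorized_text_to_video(prompt: Optional[str] = None,
                             image: Optional[Frame] = None) -> Video:
    """Route text-only, image-only, or text+image inputs through the two steps."""
    if prompt is None and image is None:
        raise ValueError("need a text prompt, an image, or both")
    if image is None:
        # Text only: step 1 creates the conditioning image from the prompt.
        image = generate_image(prompt)
    # Image only or text + image: step 1 is skipped and the given image is animated.
    return generate_video(prompt or "", image)


if __name__ == "__main__":
    clip = factorized_text_to_video(prompt="a corgi surfing at sunset")
    print(len(clip.frames), clip.fps, clip.frames[0].width)  # 64 16 512
```

The point of splitting things this way, per Meta, is that the video step never has to invent the whole scene from text alone; the sketch only mirrors that control flow, not the models behind it.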

So, for example, you could create video clips based on a product photo and a text prompt, which could open up a range of new creative options for brands.

Emu Video will be able to generate four-second, 512×512 videos running at 16 frames per second, and the results look pretty impressive, far more so than the text-to-video process that Meta previewed last year.

“In human evaluations, our video generations are strongly preferred compared to prior work – in fact, this model was preferred over [Meta’s previous generative video project] by 96% of respondents based on quality and by 85% of respondents based on faithfulness to the text prompt. Finally, the same model can “animate” user-provided images based on a text prompt where it once again sets a new state-of-the-art outperforming prior work by a significant margin.”

It’s an impressive-looking tool which, again, could have a range of uses, depending on whether it performs as well in real-world applications. But it looks promising, and it could be a big step forward for Meta’s generative AI tools.

Also worth noting: the little watermark in the bottom left of each clip is Meta’s new “AI-generated” tag. Meta is working on a range of tools to signify AI-generated content, including embedded digital watermarks on synthetic content. Many of these can still be edited out, but that will be harder to do with video clips.

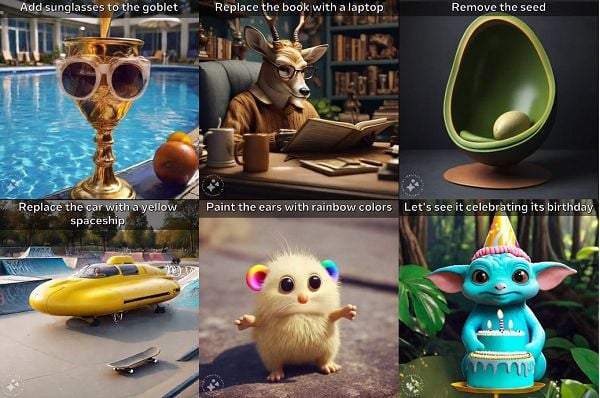

Meta’s second new element is called “Emu Edit”, which will enable users to make specific, custom edits within visuals.

2️⃣ Emu Edit

This new model is capable of free-form editing through text instructions. Emu Edit precisely follows instructions and ensures only specified elements of the input image are edited while leaving areas unrelated to instruction untouched. This enables more powerful… pic.twitter.com/ECWF7qfWYY

— AI at Meta (@AIatMeta) November 16, 2023

The most interesting aspect of this project is that it works from conversational prompts, so you won’t need to highlight the part of the image you want to edit (like the drinks). You’ll just ask it to edit that element, and the system will understand which part of the visual you’re referring to.

That could be a big help in editing AI visuals and in creating more customized variations based on exactly what you need.

The possibilities of both projects are significant, and they could give creators and brands a heap of new ways to use generative AI.

Meta hasn’t said when these new tools will be available in its apps, but both look set to arrive soon, opening up a range of new creative opportunities.

You can read about Meta’s new Emu experiments here and here.