Meta’s looking to fuel the development of the next stage of translation tools, with the release of its new SeamlessM4T multilingual AI translation model, which it says represents a significant advance in speech and text translation, across almost 100 different languages.

Introducing SeamlessM4T, the first all-in-one, multilingual multimodal translation model.

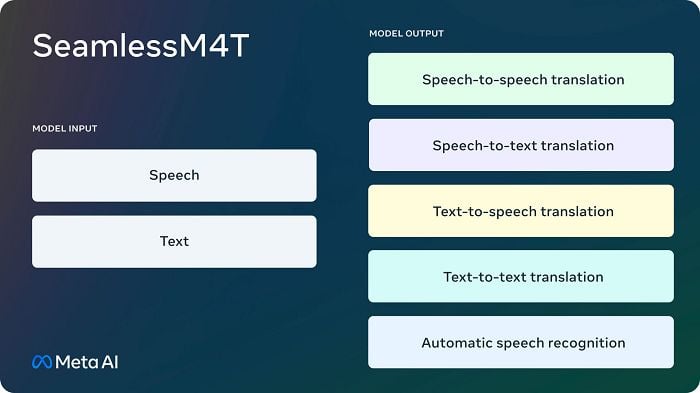

This single model can perform tasks across speech-to-text, speech-to-speech, text-to-text translation & speech recognition for up to 100 languages depending on the task.

Details ⬇️

— Meta AI (@MetaAI) August 22, 2023

As shown in the above example, Meta’s SeamlessM4T model is able to understand both speech and text inputs, and translate into both formats, all in one system, which could eventually enable more advanced communication tools to assist with multi-lingual interactions.

As explained by Meta:

“Building a universal language translator, like the fictional Babel Fish in The Hitchhiker’s Guide to the Galaxy, is challenging because existing speech-to-speech and speech-to-text systems only cover a small fraction of the world’s languages. But we believe the work we’re announcing today is a significant step forward in this journey. Compared to approaches using separate models, SeamlessM4T’s single system approach reduces errors and delays, increasing the efficiency and quality of the translation process. This enables people who speak different languages to communicate with each other more effectively.”

As Meta notes, the hope is that the new process will help to facilitate sci-fi-like real-time translation tools, which could soon be an actual reality, enabling broader communication between people around the world.

The expansion of this, then, would be translated text on a heads-up display within AR glasses, which Meta is also developing. More advanced AR functionality obviously expands beyond this, but a real-time universal translator, built into a visual overlay, could be a major step forward for communications, especially if, as expected, AR glasses do eventually become a bigger consideration.

Apple and Google are also looking to build the same, with Apple’s VisionPro team developing real-time translation tools for its upcoming headset device, and Google providing similar via its Pixel earbuds.

With advances like the SeamlessM4T model being built into such systems, or at least, advancing the development of similar tools, we could indeed be moving closer to a time where language is no longer a barrier to interaction.

“SeamlessM4T achieves state-of-the-art results for nearly 100 languages and multitask support across automatic speech recognition, speech-to-text, speech-to-speech, text-to-speech, and text-to-text translation, all in a single model. We also significantly improve performance for low and mid-resource languages supported and maintain strong performance on high-resource languages.”

Meta’s now publicly releasing the SeamlessM4T model in order to allow external developers to build on the initial framework.

Meta’s also releasing the metadata of SeamlessAlign, which it says is the biggest open multimodal translation dataset to date, with over 270,000 hours of mined speech and text alignments.

It’s a significant development, which could have a range of valuable uses, and marks another step towards the creation of functional, valuable digital assistants, which could make Meta’s coming wearables a more attractive product.

You can read more about Meta’s SeamlessM4T system here.