Among the various use cases for the new slate of large language models (LLMs), and the generative AI tools built on them, code generation is probably one of the most valuable and viable.

Coding problems tend to have definitive answers, along with established patterns that can be used to achieve what you want. And while coding knowledge is key to creating effective, functional systems, basic recall also plays a big part, or at least knowing where to look to find relevant code examples to adapt.

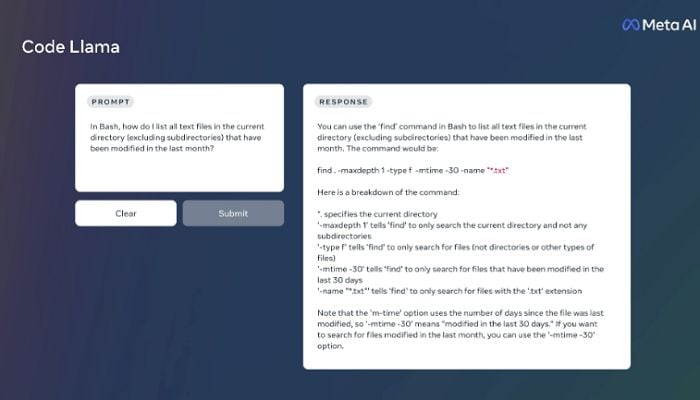

Which is why this could be significant. Today, Meta’s launching “Code Llama”, its latest AI model, which is designed to generate and analyze code snippets to help find solutions.

As explained by Meta:

“Code Llama features enhanced coding capabilities. It can generate code and natural language about code, from both code and natural language prompts (e.g., “Write me a function that outputs the fibonacci sequence”). It can also be used for code completion and debugging. It supports many of the most popular programming languages used today, including Python, C++, Java, PHP, Typescript (Javascript), C#, Bash and more.”
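For context, the Fibonacci prompt in Meta’s example is the kind of request that has a short, checkable answer. A minimal sketch of what a correct response might look like in Python is below. This is a hand-written illustration, not actual Code Llama output, and the function name `fibonacci` and its `n` parameter are assumptions made for the example.

```python
# Hand-written illustration of the kind of answer the prompt asks for.
# This is NOT output from Code Llama; the name and signature are assumptions.

def fibonacci(n):
    """Return the first n numbers of the Fibonacci sequence as a list."""
    sequence = []
    a, b = 0, 1
    for _ in range(n):
        sequence.append(a)
        a, b = b, a + b  # advance to the next pair of values
    return sequence


if __name__ == "__main__":
    print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

Because the result is easy to verify, a programmer can quickly confirm whether a generated version is right, which is part of what makes code a good fit for these tools.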

The tool effectively functions like a Google for code snippets, producing complete, working code in response to text prompts.

Which could save a lot of time. As noted, while code knowledge is required for debugging, most programmers still search for code examples for specific elements, then work them into their own projects, albeit in customized form.

Code Llama won’t replace humans in this respect (because if there’s a problem, you’ll still need to be able to work out what it is), but Meta’s more refined, code-specific model could be a big step towards better facilitating code creation via LLMs.

Meta’s releasing the base Code Llama model in three sizes, with 7 billion, 13 billion, and 34 billion parameters respectively.

“Each of these models is trained with 500 billion tokens of code and code-related data. The 7 billion and 13 billion base and instruct models have also been trained with fill-in-the-middle (FIM) capability, allowing them to insert code into existing code, meaning they can support tasks like code completion right out of the box.”
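To make the fill-in-the-middle idea more concrete, here is a rough sketch of how an editor might prepare such a request: the code before the cursor becomes a prefix, the code after it becomes a suffix, and the model is asked to produce what goes in between. The helper names and the `<PREFIX>`, `<SUFFIX>` and `<MIDDLE>` markers are hypothetical placeholders for illustration only, not Code Llama’s actual prompt format, and the completion is hard-coded rather than generated by a model.

```python
# Sketch of the fill-in-the-middle (FIM) idea. All names and marker strings
# here are hypothetical placeholders, not Code Llama's real prompt format.

def build_fim_prompt(source, cursor,
                     prefix_tag="<PREFIX>",
                     suffix_tag="<SUFFIX>",
                     middle_tag="<MIDDLE>"):
    """Split source at the cursor and wrap both halves in marker strings."""
    prefix = source[:cursor]   # code before the insertion point
    suffix = source[cursor:]   # code after the insertion point
    return f"{prefix_tag}{prefix}{suffix_tag}{suffix}{middle_tag}"


def apply_completion(source, cursor, completion):
    """Insert the model's completion into the source at the cursor."""
    return source[:cursor] + completion + source[cursor:]


if __name__ == "__main__":
    source = "def add(a, b):\n    return \n"
    cursor = source.index("return ") + len("return ")

    prompt = build_fim_prompt(source, cursor)
    print(prompt)

    # Stand-in for a model response; a real system would send `prompt`
    # to the model and use whatever text it returns.
    completion = "a + b"
    print(apply_completion(source, cursor, completion))
```

The point is that the model sees both sides of the gap, so its suggestion can match what comes after the cursor as well as what comes before it.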

Meta’s also publishing two additional variations: Code Llama - Python, which is further specialized for Python, and Code Llama - Instruct, which is fine-tuned to follow natural language instructions.

While the current generative AI tools are amazing in what they’re able to do, for most tasks they’re still too flawed to be relied upon, working more as complementary elements than standalone solutions. But for technical responses, like code, where there is a definitive answer, they could be especially valuable. And if Meta’s Code Llama model works in producing functional code elements, it could save a lot of programmers a lot of time.

You can read the full Code Llama documentation here.