Today, Meta published its latest ‘Widely Viewed Content Report’, which highlights the most-viewed organic content in Facebook Feeds among US-based users during the first quarter of 2022.

Meta created the report to counter the narrative that its algorithms help to amplify right-wing and extremist content. That narrative has been fueled largely by this Twitter profile, which highlights the most-shared Facebook links each day and has been widely quoted in such criticism.

The top-performing link posts by U.S. Facebook pages in the last 24 hours are from:

1. TMZ
2. 11Alive
3. E! News
4. Ben Shapiro
5. ABC News
6. TMZ
7. People
8. The Hollywood Reporter
9. Good Morning America
10. Bloomberg

Facebook’s Top 10 (@FacebooksTop10), May 15, 2022

Meta published its first Widely Viewed Content Report last August, and since then, the report hasn’t really helped to dispel such concerns, with many of the links included in its most-viewed listings later removed by Facebook’s moderators for violating platform policies.

So how does this latest update fare on this front?

Not great:

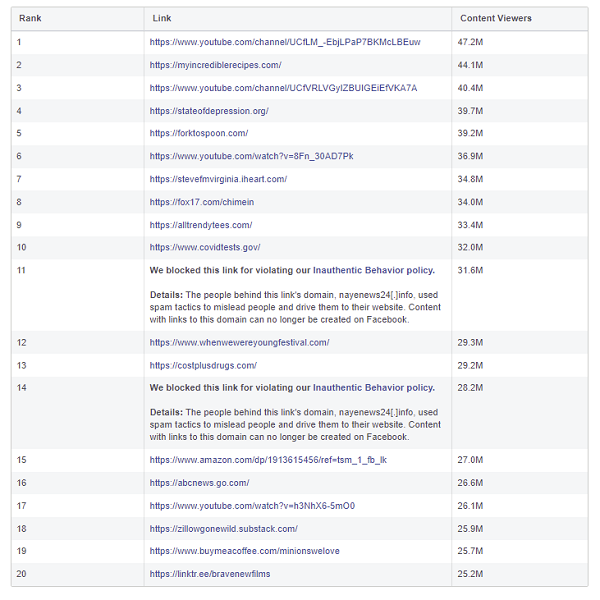

As this chart shows, two of the most-viewed links from Facebook referrals in Q1 were eventually found to violate Facebook policy, but only after they’d garnered a cumulative 60 million impressions via Facebook traffic.

That’s not ideal – but don’t worry, Meta has also updated its methodology for this chart to more accurately reflect what users actually see in the app: links that don’t render previews will no longer be counted in this category.

The above listing uses the old methodology, while this listing uses the new process:

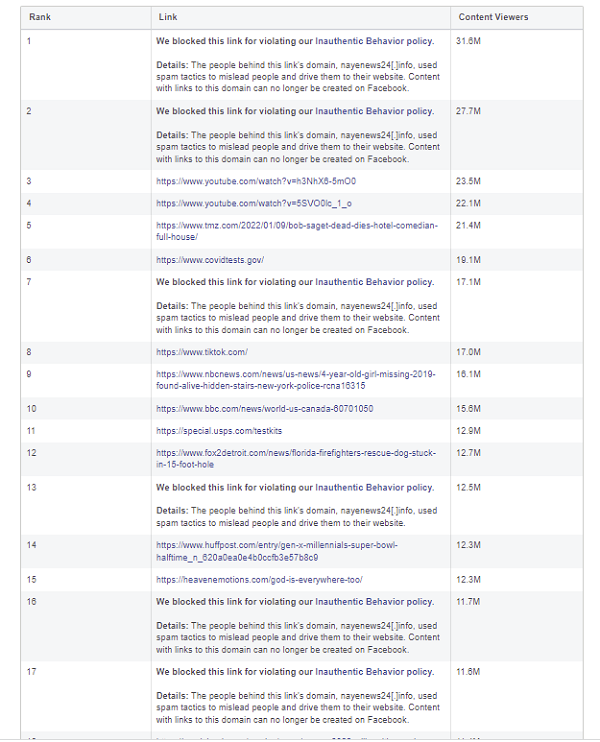

Oh. That’s not any better.

As you can see, 6 of the top 20 most shared links on Facebook in Q1 were eventually found to be in violation of Facebook’s policies, but they had already received a collective 112 million views before Facebook’s moderators removed them.

So the report shows that Meta is amplifying questionable content, but we have no way of knowing exactly what that content is or was because Meta has chosen not to report the details.

Though it did provide this explanation:

“In this report, there were pieces of content that have since been removed from Facebook for violating our policies of Inauthentic Behavior. The removed links were all from the same domain, and links to that domain are no longer allowed on Facebook.”

Further investigation has found that the domain in question is a spammy news site called Naye News, which has never appeared in Facebook’s listings before.

But Facebook itself chose not to name the domain, leaving out that context.

So the value of the report is…?

This has been the key question about the report since its inception, with Meta actually scrapping an initial version of its Widely Viewed Content listing because it reinforced existing criticisms of the app rather than helping to dispute the negative impacts of Facebook’s amplification.

It’s hard to see this data doing anything else, with Facebook’s own internal insights showing that content against its own rules is getting huge reach, even if it is eventually removed.

Looking at the other links on this list, there are COVID conspiracy theories, Minion memes, political activist films, and ‘Zillow Gone Wild’.

It’s not great – and while Meta says that the most popular links ‘ranged from humor, culture, to DIY’, its own data shows that misinformation, divisive content and other material that violates its own rules is being amplified by its systems.

Of course, Meta says that this is still only a fraction of what people see in its apps.

“Even though our most viewed content might have a very large number of content viewers, as measured as a percentage of all of Facebook content viewers, they represent only a small fraction of total views in Feed in the US that quarter. In short, it is uncommon for different people to see the same content in their Feed.”

That may be true, but the impact is still significant. And as we’ve noted previously, the comparative flaw in this report, versus the daily top 10 most-shared links listing, is that it covers the most-shared content over a three-month period, while news stories are typically only relevant day-to-day. Sure, a recipe post might get more clicks cumulatively over three months, but a divisive news story will only generate traffic for a tiny fraction of that time, which makes direct comparisons difficult.

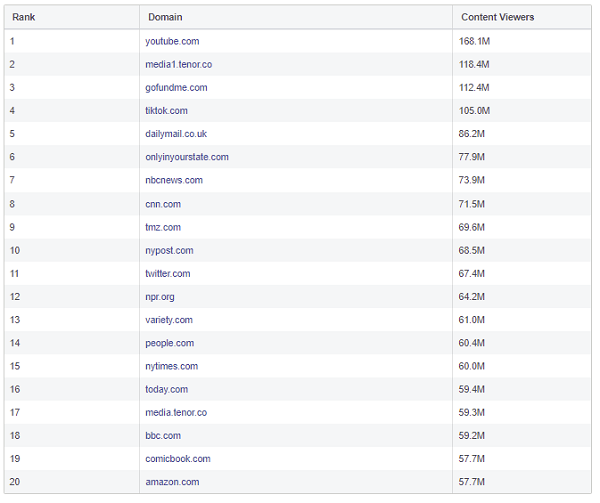

Meta also shares a listing of the most-viewed domains to provide some transparency on this front, but the variability of the specific URLs within each domain makes this hard to measure as well.

What YouTube clips were being shared? What TikTok clips? What tweets? In aggregate, this may show that, say, Fox News is not as popular as the daily Top 10 list may suggest. But it still reveals little about what Facebook’s systems seek to amplify.

Which is the key element here. Meta’s essentially trying to shift the narrative that its algorithms amplify divisive, questionable, harmful content, yet its own data doesn’t really support that case. The fact of the matter is that the content that performs best on Facebook is content that inspires an emotional response, and anger is a key driver of engagement.

News publishers have shifted their approaches to lean into this, knowing that if they take a more partisan stance, that will trigger even more debate, and drive stronger sharing performance in the app. So while Meta may be keen to point out that such content ‘represents only a small fraction of total views in Feed’, the indisputable truth is that the entire news ecosystem has been changed by Meta’s algorithmic amplification, which incentivizes more divisive, argumentative and misleading takes.

Meta can throw its hands in the air all it wants and say that it’s a people problem, that it’s not responsible for what people share in its apps. But its attempt to counter these criticisms with its own alternative, selective reporting is, as this data set shows, largely useless.

There are real problems with the online news ecosystem and the incentive systems that digital platforms have embedded. Acknowledging them is a key step toward finding solutions, whereas countering them in this form seems like a stubborn, protectionist approach that avoids the core problems at play.

You can read Meta’s Widely Viewed Content Report for Q1 2022 here.