Meta has published a new overview of its evolving efforts to combat coordinated influence operations across its apps. That work became a key focus for the platform following the 2016 US Presidential Election, in which Russia-based operatives were found to be using Facebook to influence US voters.

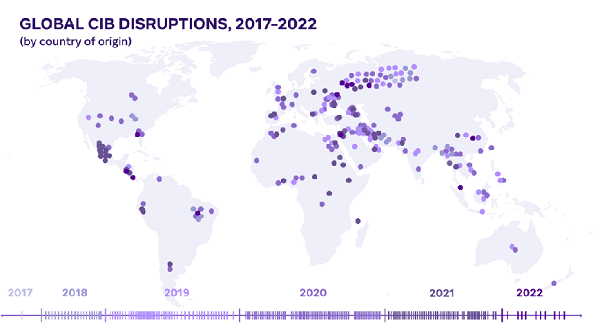

Since then, Meta says that it has detected and removed more than 200 covert influence operations, while also sharing information on each network’s behavior with others in the industry, so that they can all learn from the same data and develop better approaches to tackling such operations.

As per Meta:

“Whether they come from nation states, commercial firms or unattributed groups, sharing this information has enabled our teams, investigative journalists, government officials and industry peers to better understand and expose internet-wide security risks, including ahead of critical elections.”

Meta says that it’s detected influence operations targeting over 100 different nations, with the United States being the most targeted country, followed by Ukraine and the UK.

That likely points to the influence that the US has over global policy, though it could also relate to the popularity of social networks in these regions, which makes them a bigger vector for influence.

In terms of where these groups originate, Russia, Iran and Mexico were the three most prolific geographic sources of coordinated inauthentic behavior (CIB) activity.

Russia, as noted, is the most widely publicized home for such operations – though Meta also notes that while many Russian operations have targeted the US, more operations from Russia actually targeted Ukraine and Africa, as part of the nation’s global efforts to sway public and political sentiment.

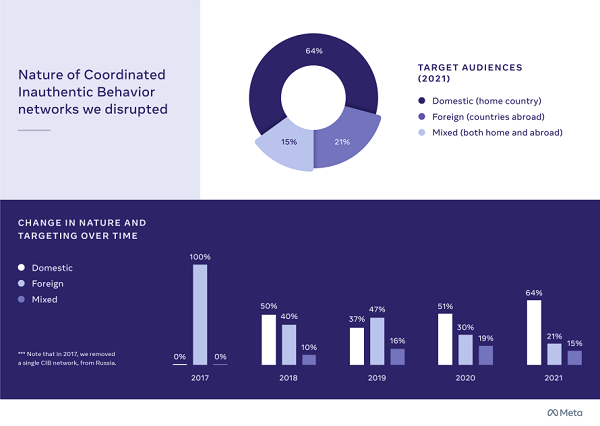

Meta also notes that, over time, a growing share of these operations have targeted audiences in their own country, as opposed to a foreign one.

“For example, we’ve reported on a number of government agencies targeting their own population in Malaysia, Nicaragua, Thailand and Uganda. In fact, two-thirds of the operations we’ve disrupted since 2017 focused wholly or partially on domestic audiences.”

In terms of how these operations are evolving, Meta notes that CIB groups are increasingly turning to AI-generated images to disguise their activity.

“Since 2019, we’ve seen a rapid rise in the number of networks that used profile photos generated using artificial intelligence techniques like generative adversarial networks (GAN). This technology is readily available on the internet, allowing anyone – including threat actors – to create a unique photo. More than two-thirds of all the CIB networks we disrupted this year featured accounts that likely had GAN-generated profile pictures, suggesting that threat actors may see it as a way to make their fake accounts look more authentic and original in an effort to evade detection by open source investigators, who might rely on reverse-image searches to identify stock photo profile photos.”

That’s notable, particularly given the steady rise of AI-generation technology, spanning from still images to video to text and more. While these systems will have valuable uses, there are also potential dangers and harms, and it’s worth considering how such technologies can be used to shroud inauthentic activity.

The report provides some valuable perspective on the scale of the issue, and how Meta’s working to tackle the ever-evolving tactics of scammers and manipulation operations online.

And these operations are not going to stop, which is why Meta has also put out the call for increased regulation, as well as continued action by industry groups.

Meta’s also updating its own policies and processes in line with these needs, including updated security features and support options.

That will also include more live chat capacity:

“While our scaled account recovery tools aim at supporting the majority of account access issues, we know that there are groups of people that could benefit from additional, human-driven support. This year, we’ve carefully grown a small test of a live chat support feature on Facebook, and we’re beginning to see positive results. For example, during the month of October we offered our live chat support option to more than a million people in nine countries, and we’re planning to expand this test to more than 30 countries around the world.”

That could be a big update. As anyone who’s ever dealt with Meta knows, getting a human on the line to assist can be an almost impossible task.

Such support is difficult to scale, especially when serving close to 3 billion users, but Meta’s now working to provide more support functionality, as another means to better protect people, and help them avoid harm online.

It’s a never-ending battle, and given how many people Meta’s apps can reach, you can expect to see bad actors continue to target them as a means to spread their messaging.

As such, it’s worth noting how Meta is refining its approach, as well as the scope of work conducted thus far on these elements.

You can read Meta’s full Coordinated Inauthentic Behavior Enforcements report for 2022 here.