Meta has published its Community Standards Enforcement Report for Q1 2022, which provides an overview of its ongoing efforts to detect misuse and abuse in its apps, and to address them via evolving detection and removal processes.

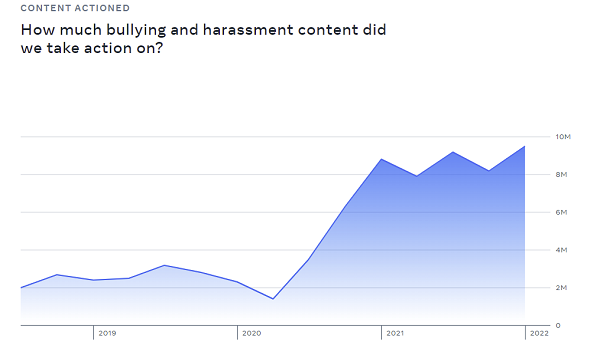

And those efforts do appear to be having an impact. First off, on bullying and harassment, Meta says that it increased its proactive detection rate for such content from 58.8% in Q4 2021 to 67% in Q1 2022, via improvements to its detection technology.

This is a key area of focus, particularly on Instagram, where research has shown that younger users can suffer serious psychological impacts from in-stream comments and criticisms, and abuse from peers. As such, it’s good to see Meta’s systems evolving in this respect, which could help to provide more support and assistance for those facing such challenges.

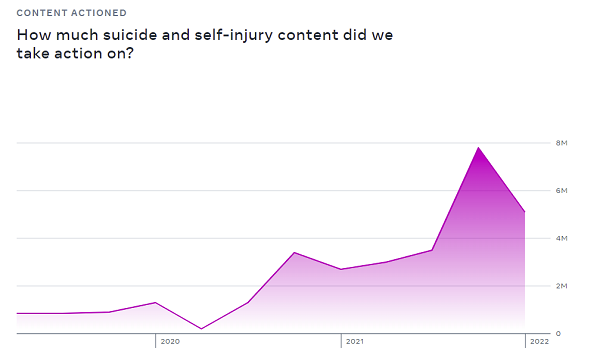

More concerningly, though, Meta has also seen a rise in suicide and self-harm content on Instagram over the past two quarters.

Meta also says that it removed 1.8 billion pieces of spam content in Q1, up from 1.2 billion in Q4 2021, ‘due to actions on a small number of users making a large volume of violating posts’.

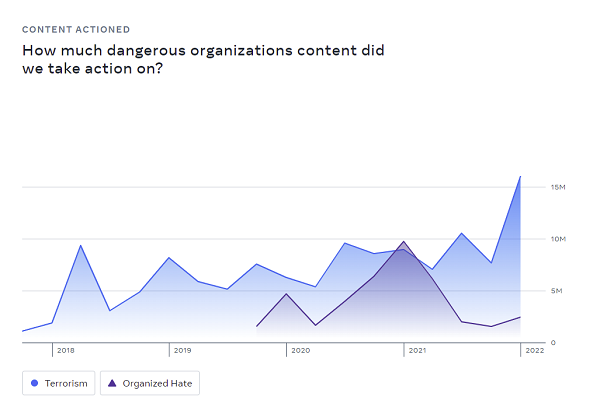

It’s also taking more action on terrorism and organized hate, with enforcement numbers increasing on both Facebook and Instagram.

Meta says that views of content that violates its terrorism policy are very infrequent, with most of that content removed before people see it.

“In Q1 2022, the upper limit was 0.05% for violations of our policy for terrorism on Facebook. This means that out of every 10,000 views of content on Facebook, we estimate no more than 5 of those views contained content that violated the policy.”
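As a quick check of the arithmetic in that quote, here is a minimal sketch that converts a prevalence percentage into views per 10,000. The function name `views_per_10k` is made up for illustration and is not taken from Meta's report.

```python
def views_per_10k(prevalence_percent: float) -> float:
    """Convert a prevalence percentage into views per 10,000 content views."""
    # A percentage is "per 100", so scale it up by 100 to get "per 10,000".
    return prevalence_percent * 100


# 0.05% is the upper limit quoted in Meta's Q1 2022 report.
upper_limit_percent = 0.05
print(round(views_per_10k(upper_limit_percent), 6))  # prints 5.0
```

So an upper limit of 0.05% works out to no more than 5 violating views in every 10,000, which matches Meta's own wording.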

Even so, it is worth noting this rise in detection and enforcement, on both Facebook and IG.

Also, don’t show this to Elon:

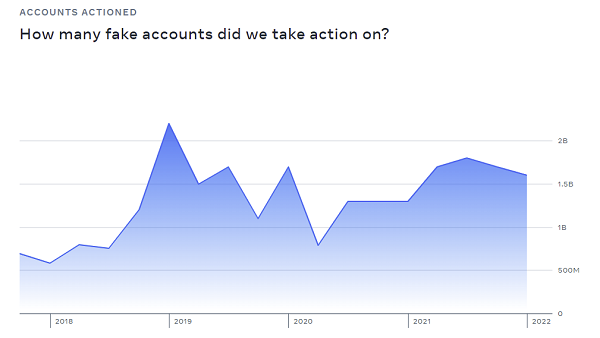

Meta removed 1.6 billion fake accounts in Q1 2022, while its rate of fake profiles remains steady.

“We estimate that fake accounts represented approximately 5% of our worldwide monthly active users (MAU) on Facebook during Q1 2022.”

That’s the exact same percentage of fake profiles that Twitter has long reported, a figure which has now become a key focus in Elon Musk’s takeover offer for the app.

It’s interesting to note that this 5% figure almost seems like an agreed-upon industry norm, with the actual numbers impossible to fully determine. Like Twitter, Meta would likely use sampling to measure the rate of fakes, but it’ll be interesting to see if, when pressed, Twitter is forced to come up with a more accurate, in-depth profile of fake account activity in its app.
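To give a rough sense of how a sampling-based estimate like this might work, here is a minimal sketch. It assumes a simple random sample of accounts reviewed by hand, with a normal-approximation 95% confidence interval. The sample size and fake count are hypothetical numbers chosen for illustration, and neither Meta nor Twitter has confirmed that this is the method they use.

```python
import math


def estimate_fake_rate(sample_size: int, fakes_found: int, z: float = 1.96):
    """Estimate the share of fake accounts from a reviewed random sample.

    Returns (estimate, lower_bound, upper_bound) using a normal
    approximation to the binomial, with z = 1.96 for roughly 95% confidence.
    """
    if sample_size <= 0 or not 0 <= fakes_found <= sample_size:
        raise ValueError("need 0 <= fakes_found <= sample_size and sample_size > 0")
    p = fakes_found / sample_size
    margin = z * math.sqrt(p * (1 - p) / sample_size)
    # Clamp the bounds so they stay within the valid 0..1 range.
    return p, max(0.0, p - margin), min(1.0, p + margin)


# Hypothetical review: 10,000 sampled accounts, 500 judged to be fake.
estimate, low, high = estimate_fake_rate(sample_size=10_000, fakes_found=500)
print(f"estimate: {estimate:.2%}, 95% interval: {low:.2%} to {high:.2%}")
```

The point is that any sampled figure comes with a margin of error, and that margin shrinks as the sample grows. That's one reason a headline number like 5% is better read as an estimate than an exact count.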

Either way, Meta’s stats are up to industry standard, according to a new audit by EY, which found that its enforcement metrics were ‘fairly stated’, and that its internal controls were ‘suitably designed and operating effectively’.

That, presumably, applies to fake account numbers as well, which may mean that 5% is indeed an acceptable estimate, based on the data available.

Whether that provides more assurance or not is likely down to your personal perspective, but Meta has now had its data tracking processes independently assessed, which should add more weight to its numbers.

You can check out Meta’s full ‘Community Standards Enforcement Report’ for Q1 2022 here.