While it may not be leading the public charge on generative AI just yet, Meta has been developing a range of AI creation tools for years, and is only now looking to publish more of its research for public consumption.

That shift has been prompted by the sudden interest in generative AI tools, though Meta’s more recent launch schedule makes it look more reactive than it actually is.

Meta’s latest generative AI paper looks at a new process that it’s calling ‘Image Joint Embedding Predictive Architecture’ (I-JEPA), which enables predictive visual modeling based on a broader understanding of an image, as opposed to pixel matching.

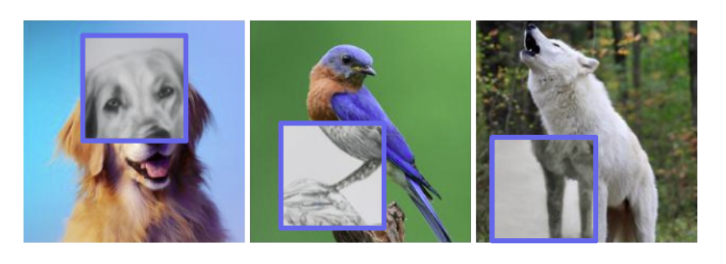

The sections within the blue boxes here represent the outputs of the I-JEPA system, showing how it’s developing better contextual understanding of what images should look like, based on fractional inputs.

That’s somewhat similar to the ‘outpainting’ features that have been cropping up in other generative AI tools, like DALL-E, which enable users to build all-new backgrounds for visuals, based on existing cues.

The difference in Meta’s approach is that it predicts missing content from a learned, more abstract understanding of context, which Meta says is closer to how people reason, as opposed to pixel-level matching.

As explained by Meta:

“Our work on I-JEPA (and Joint Embedding Predictive Architecture (JEPA) models more generally) is grounded in the fact that humans learn an enormous amount of background knowledge about the world just by passively observing it. It has been hypothesized that this common sense information is key to enable intelligent behavior such as sample-efficient acquisition of new concepts, grounding, and planning.”

The work here, guided by research from Meta’s Chief AI Scientist Yann LeCun, is another step towards simulating more human-like responses in AI applications, which is the true border crossing that could take AI tools to the next stage.

If machines can be taught to think, as opposed to merely guessing based on probability, that will see generative AI take on a life of its own. Which freaks some people the heck out, but it could lead to all new uses for such systems.

“The idea behind I-JEPA is to predict missing information in an abstract representation that’s more akin to the general understanding people have. Compared to generative methods that predict in pixel/token space, I-JEPA uses abstract prediction targets for which unnecessary pixel-level details are potentially eliminated, thereby leading the model to learn more semantic features.”
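To make that distinction a little more concrete, here’s a toy sketch of the difference between scoring a prediction in pixel space and scoring it in a more abstract representation space. This is not Meta’s code. The `encode` function is a hypothetical stand-in that just keeps average brightness (I-JEPA learns its encoder), the averaging "predictors" are placeholders, and the patch values are made up for illustration.

```python
def encode(patch):
    # Hypothetical stand-in for a learned encoder: keeps only mean brightness
    # and throws away pixel-level texture. (Assumption, not I-JEPA's encoder.)
    return [sum(patch) / len(patch)]

def average(vectors):
    # Element-wise mean of equal-length vectors; used as a placeholder predictor.
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def mse(a, b):
    # Mean squared error between two equal-length vectors.
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

# Visible context patches and a hidden target patch (made-up values).
# The target has the same overall brightness but a shifted texture.
context_patches = [[0.25, 0.75, 0.25, 0.75], [0.25, 0.75, 0.25, 0.75]]
target_patch = [0.75, 0.25, 0.75, 0.25]

# Pixel-space approach: guess the hidden pixels, then compare pixel by pixel.
pixel_prediction = average(context_patches)
pixel_loss = mse(pixel_prediction, target_patch)

# Representation-space approach: guess the hidden patch's embedding,
# then compare against the embedding of the real target patch.
embedding_prediction = average([encode(p) for p in context_patches])
embedding_loss = mse(embedding_prediction, encode(target_patch))

print(pixel_loss, embedding_loss)  # prints 0.25 0.0
```

In this toy case, the pixel-space guess gets penalized for missing the exact texture, while the representation-space guess is scored only on what the encoder keeps, which is roughly the idea Meta describes when it talks about eliminating unnecessary pixel-level details.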

It’s the latest in Meta’s advancing AI tools, which now also include text generation, visual editing tools, multi-modal learning, music generation, and more. Not all of these are available to users as yet, but the various advances highlight Meta’s ongoing work in this area, which has become a bigger focus as other generative AI systems have hit the consumer market.

Again, Meta may seem like it’s playing catch-up, but like Google, it’s actually well-advanced on this front, and well-placed to roll out new AI tools that will enhance its systems over time.

It’s just being more cautious – which, given the various concerns around generative AI systems, and the misinformation and mistakes that such tools are now spreading online, could be a good thing.

You can read more about Meta’s I-JEPA project here.