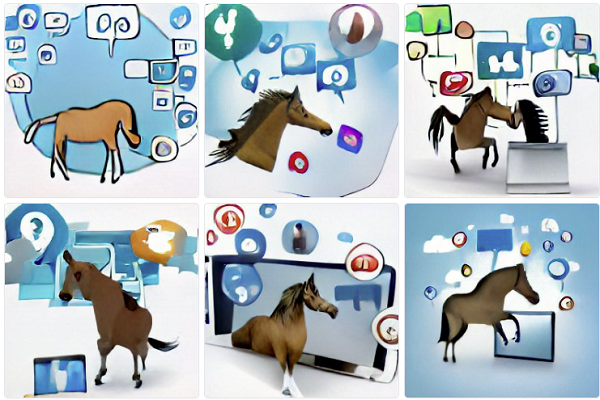

One of the more interesting AI application developments of late has been Dall-E, an AI-powered tool that lets you enter any text prompt, like ‘horse using social media’, and then generates images based on its interpretation of that input.

You’ve likely seen many of these visual experiments floating around the web (‘Weird Dall-E Mini Generations’ is a good place to find some of the more unusual examples). Some are highly useful and applicable in new contexts, while others are just strange, mind-warping interpretations that show how the AI system views the world.

Soon, you could have another way to experiment with this kind of AI interpretation: Meta’s new ‘Make-A-Scene’ system, which uses text prompts as well as input drawings to create wholly new visual interpretations.

As explained by Meta:

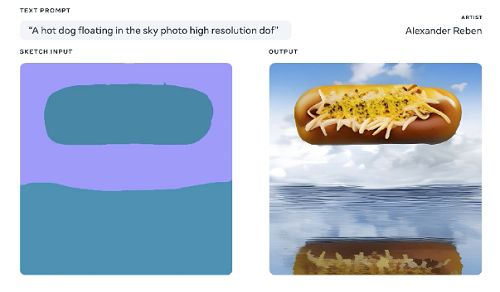

“Make-A-Scene empowers people to create images using text prompts and freeform sketches. Prior image-generating AI systems typically used text descriptions as input, but the results could be difficult to predict. For example, the text input ‘a painting of a zebra riding a bike’ might not reflect exactly what you imagined; the bicycle might be facing sideways, or the zebra could be too large or small.”

Make-A-Scene seeks to address this by providing more controls to help guide your output. So it’s like Dall-E but, in Meta’s view at least, a little better, because you can use additional inputs, like sketches, to steer the system.

“Make-A-Scene captures the scene layout to enable nuanced sketches as input. It can also generate its own layout with text-only prompts, if that’s what the creator chooses. The model focuses on learning key aspects of the imagery that are more likely to be important to the creator, like objects or animals.”

Such experiments highlight how far computer systems have come in interpreting different inputs, and how much AI networks can now understand about what we communicate, and what we mean, in a visual sense.

Eventually, that will help machine learning systems learn more about how humans see the world. That may sound a little scary, but it will ultimately help power a range of functional applications, like self-driving cars, accessibility tools, improved AR and VR experiences, and more.

Still, as you can see from these examples, we’re some way off from AI thinking like a person, or becoming sentient with its own thoughts.

But maybe not as far off as you might think. These examples offer an interesting window into ongoing AI development. They’re just for fun right now, but the underlying technology could have significant implications for the future.

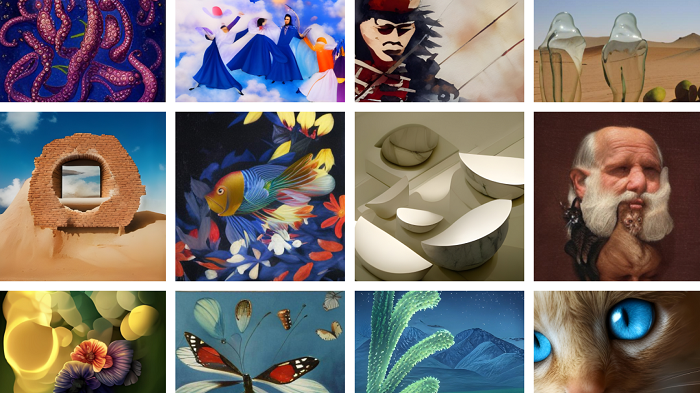

In its initial testing, Meta gave various artists access to Make-A-Scene to see what they could do with it.

It’s an interesting experiment. The Make-A-Scene app isn’t available to the public yet, but you can access more technical information about the project here.