This is not great.

With the US midterms fast approaching, a new investigation by human rights group Global Witness, in partnership with the Cybersecurity for Democracy team at NYU, has found that Meta and TikTok are still approving ads that include political misinformation and that clearly violate their stated ad policies.

To test each platform's ad approval process, the researchers used dummy accounts to submit 20 ads each to YouTube, Facebook and TikTok.

As per the report:

“In total we submitted ten English language and ten Spanish language ads to each platform – five containing false election information and five aiming to delegitimize the electoral process. We chose to target the disinformation on five ‘battleground’ states that will have close electoral races: Arizona, Colorado, Georgia, North Carolina, and Pennsylvania.”

According to the report summary, the ads submitted clearly contained incorrect information that could potentially stop people from voting – ‘such as false information about when and where to vote, methods of voting (e.g. voting twice), and importantly, delegitimized methods of voting such as voting by mail’.

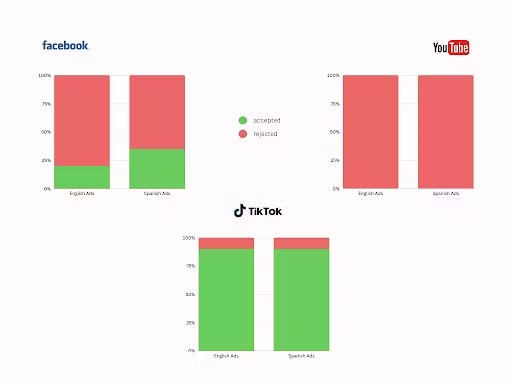

The results of their test were as follows:

- Facebook approved two of the misleading ads in English, and five of the ads in Spanish

- TikTok approved all of the ads but two (one in English and one in Spanish)

- YouTube blocked all of the ads from running

In addition to this, YouTube also banned the accounts that the researchers had used to submit their ads. Two of their three dummy accounts remain active on Facebook, while TikTok hasn’t removed any of their profiles (note: none of the ads were ever launched).

It’s a concerning overview of the state of play, just weeks out from the next major US election cycle, and the researchers also note that they’ve run similar experiments in other regions:

“In a similar experiment Global Witness carried out in Brazil in August, 100% of the election disinformation ads submitted were approved by Facebook, and when we re-tested ads after making Facebook aware of the problem, we found that between 20% and 50% of ads were still making it through the ads review process.”

YouTube, it’s worth noting, also performed poorly in its Brazilian test, approving 100% of the disinformation ads tested. So while the Google-owned platform looks to be making progress with its review systems in the US, it seemingly still has work to do in other regions.

As do the other two apps. For TikTok in particular, the findings could deepen concerns about how the platform might be used for political influence, adding to the questions that still linger around its potential ties to the Chinese Government.

Earlier this week, a report from Forbes suggested that TikTok’s parent company ByteDance had planned to use TikTok to track the physical location of specific American citizens, essentially utilizing the app as a spy tool. TikTok has strongly denied the allegations, but the report once again stokes fears around TikTok’s ownership and its connection with the CCP.

Add to that recent reportage which has suggested that around 300 current TikTok or ByteDance employees were once members of Chinese state media, that ByteDance has shared details of its algorithms with the CCP, and that the Chinese Government is already using TikTok as a propaganda/censorship tool, and it’s clear that many concerns still linger around the app.

Those fears are also no doubt being stoked by big tech powerbrokers who are losing attention, and revenue, as a result of TikTok’s continued rise in popularity.

Indeed, when asked about TikTok in an interview last week, Meta CEO Mark Zuckerberg said:

“The notion that an American company wouldn’t just obviously be working with the American government on every single thing is completely foreign [in China], which I think does speak at least to how they’re used to operating. So I don’t know what that means. I think that that’s a thing to be aware of.”

Zuckerberg stopped short of saying that TikTok should be banned in the US as a result of these connections, but noted that ‘it’s a real question’ as to whether it should be allowed to continue operating.

If TikTok is found to be facilitating the spread of misinformation, especially if that can be linked to a CCP agenda, that will be another big blow for the app. And with the US Government still assessing whether it should be allowed to continue operating in the US, and tensions between the US and China still simmering, there remains a very real possibility that TikTok could be banned entirely, which would spark a massive shift in the social media landscape.

Facebook, of course, has been the key platform for information distribution in the past, and the main focus of previous investigations into political misinformation campaigns. But TikTok’s popularity has now also made it a key source of information, especially among younger users, which enhances its capacity for influence.

As such, you can bet that this report will raise many eyebrows in various offices in DC.

In response to the findings, Meta posted this statement:

“These reports were based on a very small sample of ads, and are not representative given the number of political ads we review daily across the world. Our ads review process has several layers of analysis and detection, both before and after an ad goes live. We invest significant resources to protect elections, from our industry-leading transparency efforts to our enforcement of strict protocols on ads about social issues, elections, or politics – and we will continue to do so.”

TikTok, meanwhile, welcomed the feedback, which it says will help to strengthen its processes and policies.

It’ll be interesting to see what, if anything, comes out in the wash-up from the coming midterms.