In January 2021, in the wake of the Capitol riot and after years of debate over the impact that political content was having on Facebook users, Meta CEO Mark Zuckerberg decided that enough was enough.

As Zuckerberg noted in a Facebook earnings call on January 27th:

“One of the top pieces of feedback we’re hearing from our community right now is that people don’t want politics and fighting to take over their experience on our services.”

Political content had become so divisive that Zuckerberg directed his engineering teams to effectively cut it out of people’s News Feeds altogether, in order to focus on things that made people happier, with a view to getting Facebook usage growth back on track.

And Facebook’s team did. You may or may not have noticed, but political content has been significantly reduced in Facebook feeds over the past 12 months. In the end, though, Meta found that Facebook engagement didn’t increase as a result.

As it turns out, a lot of people do like political debates, even if they feel divisive.

These findings have been revealed in new documents obtained by The Wall Street Journal, which outline how Meta went about culling political content from Facebook feeds, then gradually adding it back in, in response to engagement shifts.

As WSJ notes, the demotion of political content in the app actually had various negative consequences in relation to Meta’s business:

“Views of content from what Facebook deems ‘high quality news publishers’ such as Fox News and CNN fell more than material from outlets users considered less trustworthy. User complaints about misinformation climbed, and charitable donations via the company’s fundraiser product through Facebook fell in the first half of 2022.”

In addition to this, WSJ reports, Facebook users simply didn’t like it.

The long-held criticism of Facebook in this respect has been that it benefits from political debates in the app, because they spark engagement. People are more likely to comment on and share posts that they have a passionate response to, one way or another, which often means that argumentative, controversial takes gain more traction than balanced reports and content.

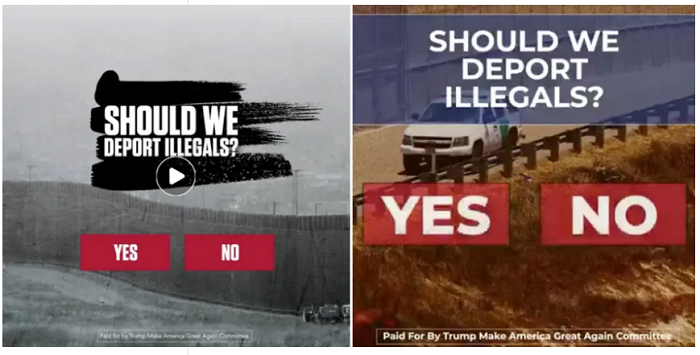

That has made Facebook the perfect breeding ground for more extreme political candidates to gain traction. As former US President Donald Trump showed, taking a hardline stance on controversial issues will certainly get you attention, with Trump using Facebook ads, in particular, as a key weapon to provoke people’s fears and, ideally, win their votes.

The downside of this is that such toxicity also pushes some audiences further away. As Zuckerberg notes, a lot of Facebook users have indicated that they’ve had enough of the politics-based fights in the app, with their relatives and former schoolmates using the platform to push their controversial opinions, which are then displayed among birthday wishes, marriage announcements, etc.

Evidently, though, Meta needs to maintain a balance – which it is trying to do:

“Meta now estimates politics accounts for less than 3% of total content views in users’ newsfeed, down from 6% around the time of the 2020 election, the documents show. But instead of reducing views through indiscriminate suppression or heavy-handed moderation, Facebook has altered the newsfeed algorithm’s recommendations of sensitive content toward what users say they value, and away from what simply makes them engage, according to documents and people familiar with the efforts.”

That last element is important – Facebook has altered the feed algorithm to better promote what users say they value, as opposed to what makes them engage.

This is a key distinction, and one that is almost impossible to get right. Meta, of course, would have its own metrics for ‘value’ in this context, based on the actions that people take which demonstrate value, as opposed to straight engagement.

Time spent reading, link clicks, shares – all of these could be more indicative of ‘value’ than of emotional engagement. But optimizing for maximum usage, while also managing this element, is a difficult balance, which no platform has got 100% right as yet.

TikTok’s algorithm arguably comes closest to providing pure entertainment based on your interests. But even then, TikTok has also been used to promote political content at times, and faces its own concerns with regard to the suppression and promotion of certain posts.

The bottom line, however, is that Facebook’s algorithm is now looking to optimize for different elements, and that simply sparking an emotional response may not be as effective as it once was. Unfortunately, we don’t know which alternative measures will help you maximize reach and resonance in the app, but it’s worth noting the News Feed algorithm shift in this respect, and weighing it against your own efforts in the app.