This is not good for Meta and its ongoing efforts to police illegal content, nor for the billions of users of its apps.

According to a new investigation conducted by The Wall Street Journal, in conjunction with Stanford University and the University of Massachusetts, Instagram has become a key connective tool for a ‘vast pedophile network’, with its members sharing illegal content openly in the app.

And the report certainly delivers a gut punch in its overview of the findings:

“Instagram helps connect and promote a vast network of accounts openly devoted to the commission and purchase of underage-sex content. Pedophiles have long used the internet, but unlike the forums and file-transfer services that cater to people who have interest in illicit content, Instagram doesn’t merely host these activities. Its algorithms promote them. Instagram connects pedophiles and guides them to content sellers via recommendation systems that excel at linking those who share niche interests.”

That description would have been a cold slap in the face for members of Meta’s Trust and Safety team when they read it in WSJ this morning.

The report says that Instagram facilitates the promotion of accounts that sell illicit images via ‘menus’ of content.

“Certain accounts invite buyers to commission specific acts. Some menus include prices for videos of children harming themselves and ‘imagery of the minor performing sexual acts with animals’, researchers at the Stanford Internet Observatory found. At the right price, children are available for in-person ‘meet ups’.”

The report identifies Meta’s reliance on automated detection tools as a key impediment to its efforts, while also highlighting how the platform’s algorithms essentially promote more harmful content to interested users through the use of related hashtags.

Confusingly, Instagram even has a warning pop-up for such content, as opposed to removing it outright.

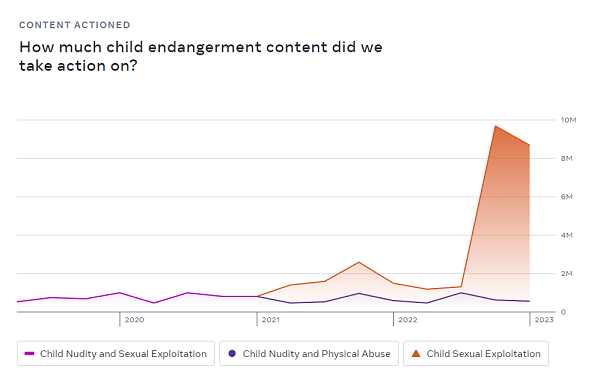

It’s certainly a disturbing summary, which highlights a significant concern within the app – though it’s worth noting that Meta’s own reporting of Community Standards violations has also shown a significant increase in enforcement actions in this area of late.

That could suggest that Meta is aware of these issues already, and that it is taking more action. But either way, as a result of this new report, Meta has vowed to do more to address these concerns, establishing a new internal taskforce to uncover and eliminate these and other networks.

The issues here obviously expand beyond brand safety, with far more important and impactful action needed to protect young users. Instagram is very popular with young audiences, and the fact that at least some of these users are essentially selling themselves in the app – and that a small team of researchers uncovered this, when Meta’s systems missed it – is a major problem, which highlights significant flaws in Meta’s processes.

Hopefully, the latest data within the Community Standards Report reflects Meta’s broader efforts to combat such content, but it’ll need to take some big steps to address this element.

Also worth noting from the report – the researchers found that Twitter hosted far less CSAM in their analysis, and that Twitter’s team actioned concerns faster than Meta’s did.

Elon Musk has vowed to address CSAM as a top priority, and it seems, at least from this analysis, that Twitter could actually be making some advances on this front.