After testing them out with selected users over the past few weeks, TikTok is now officially launching its new in-stream labels for AI-generated content, which will provide an extra level of transparency in the app.

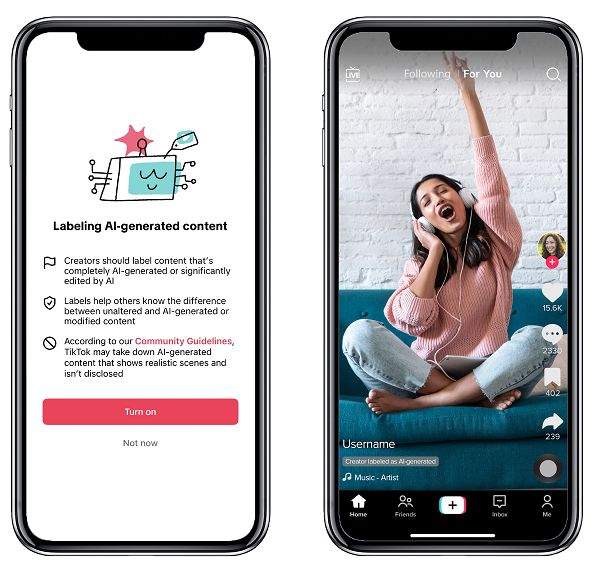

As you can see in this example, TikTok will now require users who’ve used AI to create a post in the app to flag it as such, or risk having that content removed.

In the second image above, you can see the new “Creator labeled as AI-generated” tag, which should help avoid confusion and limit the spread of misinformation within the app.

As per TikTok:

“AI enables incredible creative opportunities, but can potentially confuse or mislead viewers if they’re not aware content was generated or edited with AI. Labeling content helps address this, by making clear to viewers when content is significantly altered or modified by AI technology. That’s why we’re rolling out a new tool for creators to easily inform their community when they post AI-generated content.”

Which is an important step. We’ve already seen confusion around a range of AI-generated images, from the Pope in a puffer jacket to a fake explosion outside the Pentagon. And as generative AI tools continue to evolve, this will only get worse, which is why all platforms need to get ahead of it as best they can right now, to limit any harmful impacts.

Limiting these harms was the main focus of the recent AI regulation meeting in Washington, which was attended by the heads of the biggest tech companies. The consensus coming out of that meeting was that regulation does need to be implemented, but it remains to be seen whether new laws can be rolled out in time to mitigate the impacts of this fast-moving technology.

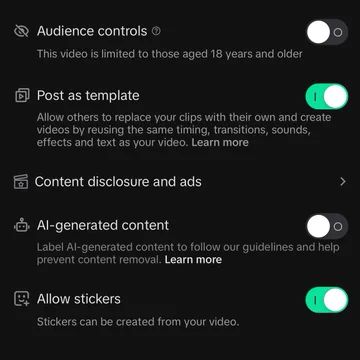

TikTok actually updated its official rules on this front back in March, but it’s now stepping up enforcement, with a dedicated tag for AI-generated content that creators can activate within the upload flow.

TikTok becomes the first platform to officially add a specific AI-generated tag. Instagram is also developing its own AI content labels, which it will likely make a posting requirement as well. YouTube is also developing new tools to deal with the expected “AI tsunami”, while X has, thus far, relied on Community Notes to help keep users informed about artificial content.

“The new label will help creators showcase the innovations behind their content, and they can apply it to any content that has been completely generated or significantly edited by AI. It will also make it easier to comply with our Community Guidelines’ synthetic media policy, which we introduced earlier this year. The policy requires people to label AI-generated content that contains realistic images, audio or video, in order to help viewers contextualize the video and prevent the potential spread of misleading content. Creators can now do this through the new label (or other types of disclosures, like a sticker or caption).”

It’s a good update, which will help keep users informed of AI manipulation in-stream.

And with more and more AI tools being developed each day, the hope is that all platforms will be able to implement similar tools, and potentially AI image detectors as well (much harder to integrate), to help combat misuse.