TikTok has shared its Community Guidelines Enforcement Report for Q1 2022 (Jan-Mar), which provides an overview of the key reasons why content is being removed from the app, how many posts are being removed in total, and how TikTok’s systems are working to minimize exposure to harmful content.

And as you would expect, given its ongoing growth, TikTok is now removing more content than ever, with sexualized content and fake accounts being among the most commonly detected violations.

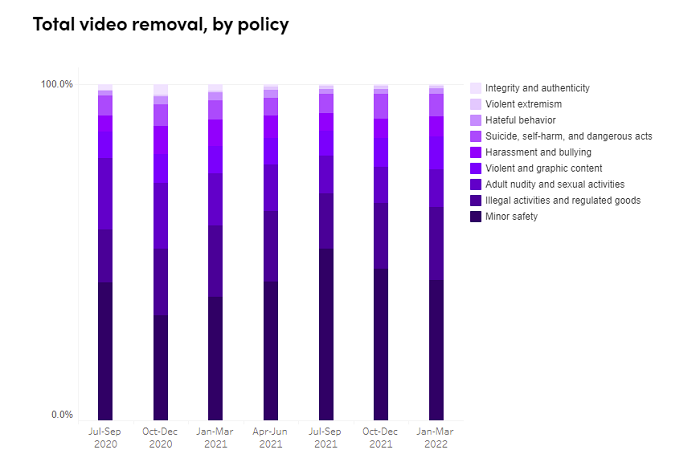

In total, TikTok removed over 102 million videos in Q1 2022, with ‘minor safety’ being the top reason for content removals by a big margin.

As you can see here, ‘nudity and sexual activity’ was another key reason for content removals, which underlines the ongoing concern that TikTok essentially incentivizes more risqué content, especially from young girls, as part of its lure to keep people scrolling through their ‘For You’ feed.

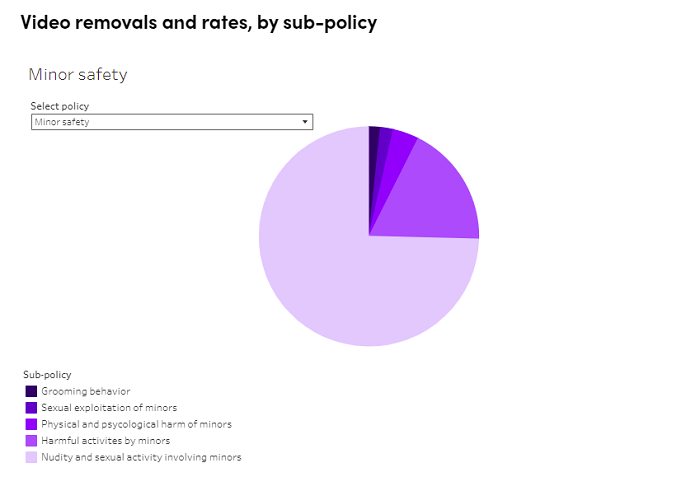

The main element within the ‘Minor safety’ segment was ‘nudity and sexual activity involving minors’, of which TikTok says more than 92% were removed before any users even saw them.

Which is a positive. But again, while TikTok’s systems are always improving and getting better at removing such material, the bigger concern here is that users are looking to post it to the app at all, which is an issue that may require more consideration and analysis from relevant authorities.

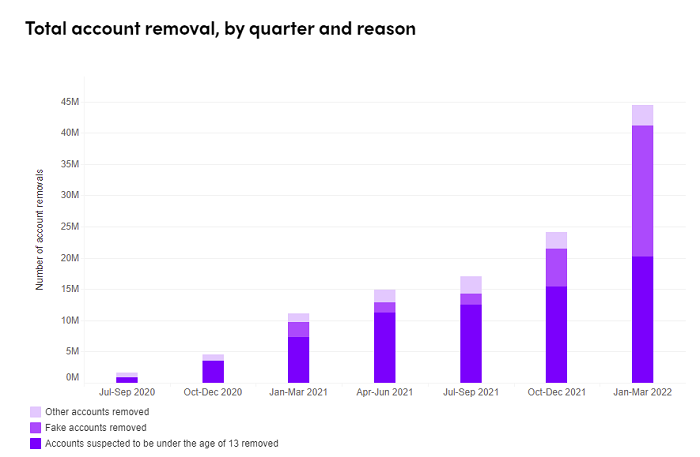

In terms of account removals, fake accounts were the biggest contributor, with almost 21m fake profiles removed in the period.

Which is a big jump. As the chart shows, TikTok removed more than 3x as many fake profiles as the previous quarter – which makes sense, given that more people are signing up to the app, and as such, more spammers and scammers are also looking to get in on the action. But it also likely means that more fake follower sellers are moving in.

Be wary of relying on influencers’ follower metrics as a proxy for engagement and reach in the app.

TikTok has also shared insights into its efforts to detect and remove misinformation around the Russian invasion of Ukraine, with 41,191 videos removed in relation to the conflict within the period. TikTok further notes that it added fact-check prompts to 5,600 videos, while it also identified and removed 6 networks, and 204 accounts, for coordinated efforts to influence public opinion and mislead users about their identities.

Which is especially interesting when you consider China’s tacit support for Russia’s actions in Ukraine and, given TikTok’s Chinese ownership, how its efforts to address this element sit against the persisting narrative that the app could essentially be controlled by the CCP.

That’s not to say that the Chinese Government doesn’t still exert some level of control over TikTok’s operations, but it is interesting that TikTok has made expanded efforts to tackle Russian-originated misinformation around the conflict.

Overall, TikTok’s enforcement numbers are in line with ongoing trends, especially in relation to its global expansion, and rising usage. But there are some notes of concern too, and while TikTok says that its systems are improving, there will always be some things that slip through the cracks.

More activity in any of these areas also means that more of this content is getting into people’s feeds, and the areas highlighted here could be significant issues moving forward.

You can read TikTok’s full Community Guidelines Enforcement Report for Q1 2022 here.