How do you test for ‘human-ness’ in social media activity, and is there actually a way to do so that scammers can’t game?

It seems that Twitter believes it can, judging by this new message discovered in the app’s back-end code.

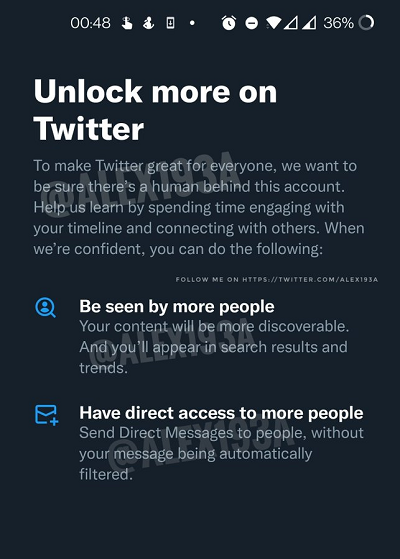

As you can see in this notification, uncovered by app researcher Alessandro Paluzzi, Twitter’s working on a new process that would seemingly restrict users from using certain elements of the app until they can prove that they’re actually human, ‘by spending time engaging with your timeline and connecting with others’.

If you pass the human test, you’ll then get improved tweet visibility, along with full access to Twitter’s DM tools.

But what that human test might entail is unclear, and could be complex. What actions can a person take that a bot can’t, and how might Twitter measure them, presumably in an automated way?

Twitter presumably won’t be able to reveal its specific criteria, as that would open the system up to scammers. But there’s also not much that a regular user can do that a bot can’t. You can program a bot account to follow and unfollow people, retweet others, and send out tweets.

It seems like it would be pretty difficult to pinpoint bot activity, but new Twitter chief Elon Musk is determined to combat the app’s bot problem, which he sees as so severe that, at one stage, he sought to end his Twitter takeover deal entirely, claiming the platform was riddled with bot accounts.

Back in August, Musk’s legal team filed a motion claiming that 27% of Twitter’s mDAU (monetizable daily active users), or just over 64 million users, were actually bots, and that only 7% of actual, human Twitter users were seeing the majority of its ads.

Twitter has long held that bots and spam accounts make up less than 5% of its user count, but Musk’s team questioned the methodology behind that figure, which saw Twitter assess a random sample of only 9,000 accounts in each reporting period.

Since taking over, however, Musk has touted Twitter’s user counts, which he says are now at record highs. But by his own team’s estimation, bots likely still make up a significant proportion of those profiles, which is why the Twitter 2.0 team is keen to implement new measures to weed out the bots and provide more assurance to ad partners and users.

That includes improved bot detection and removal (Musk says that Twitter removed a large number of bot accounts over the last week, though it has since scaled back its bot detection thresholds after also removing a raft of legitimate accounts). The effort would also incorporate Twitter’s new $8 verification scheme, which Musk is calling ‘payment verification’: the idea being that bots and scammers aren’t going to pay to register their accounts, so the program will also effectively highlight actual humans.

And if it sees enough take-up, that could be another key step in combating bots, with the majority of real users eventually displaying a verified check. Whether enough people will sign up to make this a viable approach is questionable, but in theory, this could be another element in Twitter’s bot squeeze.

The finding also comes as Twitter confirms that it’s now leaning more heavily into automation to moderate content, after its recent staff cull, which included thousands from its moderation teams.

To handle more with less human oversight, Twitter is reducing manual review of cases, which has seen it take down more content, even faster, in certain areas, reportedly including child exploitation material.

Increased reliance on automation will inevitably also lead to more incorrect reports and actions, but Twitter is now erring on the side of caution, with the team more empowered to ‘move fast and be as aggressive as possible’ on these issues, according to the platform’s Vice President of Trust and Safety, Ella Irwin.

That could be a good thing. With advances in automated detection, it’s possible that less manual intervention is now required, which could make this a more viable pathway than it would have been in the past. But time will tell, and we won’t know the full impact of Twitter’s new approach until we have solid data on the incidence of offending material. We might never get that data, now that the company is privately owned and no longer required to report such figures to the market.

Third-party reports have suggested that the incidence of hate speech has increased significantly since Musk took over the app, while child safety experts claim that the changes implemented by Musk’s team have so far done little to address this problem.

Still, Musk is hoping that adding another level of accountability, via human verification, will better enable his team to tackle these issues. But again, it remains to be seen exactly how Twitter’s systems might measure ‘human-ness’, and how effective that might be in stifling bot activity.

In theory, all of these options could work, but the history of social apps and content moderation suggests that this is not going to be easy, and that there’s no single solution to the key issues at hand.

The new Twitter team appears to acknowledge this by implementing a range of measures, and it’ll be interesting to see whether there is actually a way to combat these key concerns without large moderation teams tasked with sorting through the worst of humanity every day.

Implementing a ‘human’ test will be tough, but perhaps, by drawing on insights from Musk’s various companies and associates, Twitter can find something to add to the mix that advances its current systems.