It’s no secret that Twitter can be a cruel and unforgiving platform for those who tweet the wrong thing – whatever that may be. Some use this to their advantage, with many media personalities and politicians now posting divisive comments as a means to boost their own presence and remain top of mind. But for others, the tweet backlash can get overwhelming fast, which is why Twitter has been working to provide more ways for users to control their in-app experience, and limit negative interactions where possible.

And it could be close to releasing another new element on this front.

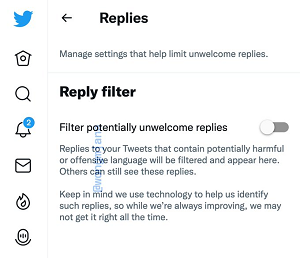

As you can see in this example, posted by app researcher Jane Manchun Wong, Twitter’s currently developing a new ‘Reply filter’ option, which would enable users to reduce their exposure to tweets that include ‘potentially harmful or offensive language’ as identified by Twitter’s detection systems.

As noted in the description, the filter would only stop you from seeing those replies, so others would still be able to view all responses to your tweets. But it could be another way to avoid unwanted attention in the app, which may make it a more enjoyable experience for those who’ve simply had enough of random accounts pushing junk responses their way.

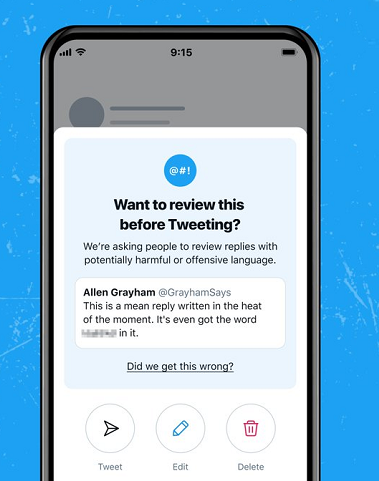

The system would presumably utilize the same detection algorithms as Twitter’s offensive reply warnings, which it re-launched in February last year, after shelving the project during the 2020 US Election.

Twitter says that these prompts have proven effective, with users opting to change or delete their replies in 30% of cases where these alerts were shown.

That suggests that many Twitter users don’t intentionally seek to offend or upset others with their responses, and that even a simple pop-up like this can have a significant effect on platform discourse, and improve tweet engagement.

Of course, that also means that in 70% of cases, users didn’t agree with Twitter’s automated assessment of their comments, and/or were not concerned about offending people. That rings true – as noted, Twitter can still be a pretty unrelenting platform for those in the spotlight (actor Tom Holland recently announced that he’s taking a break from the app due to it being ‘very detrimental to my mental state’). But still, having 30% of flagged replies changed or deleted is a significant reduction in potential tweet toxicity, and this new option, which would likely utilize the same identifiers and algorithms, could add to that in another way.

As such, it’s at least a worthy experiment from Twitter, providing even more ways for users to control their in-app experience.

There’s no word on an official release as yet, but based on the latest examples posted by Wong, it looks as though it could be coming soon.