X is rolling out some new tweaks to its Community Notes user moderation process, including an updated alerts feed to prompt more note ratings, and an updated approach to the scoring algorithm to improve note stability.

First off, on note rating. The Community Notes system is based on a range of inputs from contributors for each note that’s appended to a post within the app. For each note, X invites Community Notes participants to rate the information presented, and once a note has enough votes, from people on both sides of the political spectrum, only then is it displayed to all users in the app.
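To make that display rule concrete, here's a deliberately simplified sketch in Python. It is not X's actual scoring algorithm, which is more sophisticated. The `Rating` class, the viewpoint labels "A" and "B", and the `min_ratings` and `min_helpful_share` thresholds are all hypothetical, chosen only to illustrate the idea that a note needs enough ratings, and agreement from more than one side, before it's shown.

```python
from dataclasses import dataclass


@dataclass
class Rating:
    rater_group: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool     # True if the rater marked the note as helpful


def should_display(ratings, min_ratings=5, min_helpful_share=0.6):
    """Toy rule: show a note only if it has enough ratings AND
    raters in at least two viewpoint groups mostly found it helpful."""
    if len(ratings) < min_ratings:
        return False  # not enough votes yet

    groups = {r.rater_group for r in ratings}
    if len(groups) < 2:
        return False  # only one side has weighed in

    for group in groups:
        votes = [r.helpful for r in ratings if r.rater_group == group]
        if sum(votes) / len(votes) < min_helpful_share:
            return False  # this group did not broadly agree
    return True


# A note rated helpful by both groups is shown.
bridged = [Rating("A", True)] * 3 + [Rating("B", True)] * 3
# A note that only one group finds helpful is not.
split = [Rating("A", True)] * 3 + [Rating("B", False)] * 3

print(should_display(bridged))  # True
print(should_display(split))    # False
```

The point of the second check is that raw popularity isn't enough: in this toy version, a note with plenty of helpful votes from one side only would still stay hidden.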

Now, X is looking to better highlight notes that need more reviews, in order to boost responses on note details.

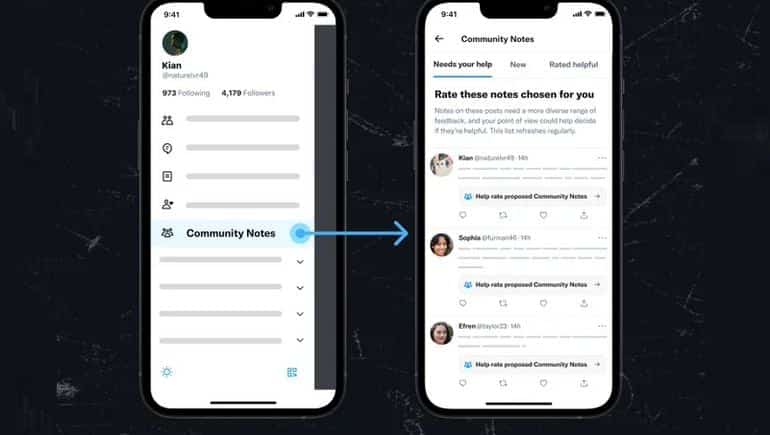

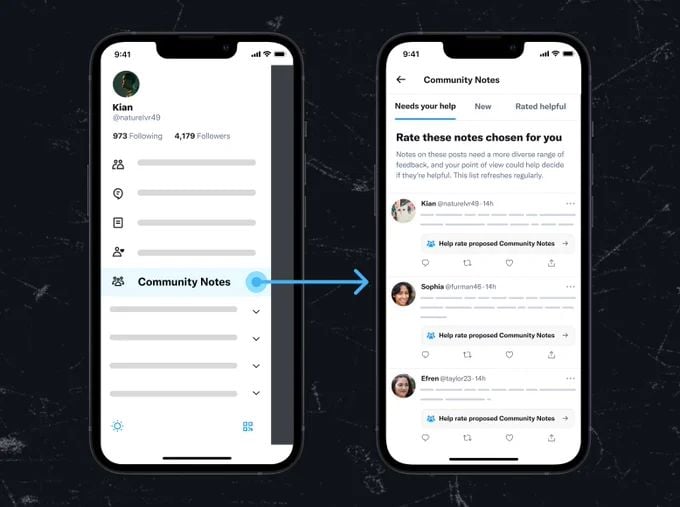

As you can see in this example, Community Notes contributors will now see an updated “Needs Your Help” timeline in the Community Notes section, which will showcase added notes that require more ratings.

That’ll see more notes checked, even faster, which could lead to more of them being shown in the app.

X has also updated its Community Notes scoring algorithm to reduce the number of Community Notes that appear and then disappear in the app.

Because Community Notes are shown based on consensus (or not), the votes for and against each can change significantly over time, which means that some posts are “noted”, then that note disappears as more votes change the balance.

This was especially evident on Elon Musk’s recent post about potentially removing the blocking option in the app.

True, however that note is incorrect and the community already voted it away

— Elon Musk (@elonmusk) August 19, 2023

X says that this new approach will reduce the number of notes that are shown in the app, but it’ll also increase the likelihood that displayed notes will stick, even as more ratings come in.
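X hasn't detailed the exact mechanics here, but one common way to get this kind of trade-off is hysteresis: require a higher score for a note to appear than for it to stay. The Python sketch below is an illustration of that general technique under assumed numbers, not X's implementation. The function names, the scores, and the `show_at` and `hide_below` thresholds are all hypothetical.

```python
def update_status(currently_shown, score, show_at=0.45, hide_below=0.35):
    """Hypothetical hysteresis rule: a hidden note needs a high score to
    appear, while a shown note is only removed if its score falls well below."""
    if currently_shown:
        return score >= hide_below
    return score >= show_at


def replay(scores, **thresholds):
    """Apply the rule to a sequence of scores and record the status each time."""
    shown = False
    history = []
    for score in scores:
        shown = update_status(shown, score, **thresholds)
        history.append(shown)
    return history


# Made-up scores for one note as more ratings arrive over time.
scores = [0.41, 0.46, 0.39, 0.42, 0.34, 0.41]

# Single cut-off at 0.40: the note flickers on and off.
print(replay(scores, show_at=0.40, hide_below=0.40))
# [True, True, False, True, False, True]

# Separate thresholds: shown less often overall, but it sticks once shown.
print(replay(scores))
# [False, True, True, True, False, False]
```

In this toy run, the single cut-off changes the note's status four times, while the two-threshold version changes it only twice, which matches the trade-off X describes: fewer notes displayed, but more stable ones.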

Among the various changes that Elon Musk has implemented at X, the app formerly known as Twitter, Community Notes is the one that seems to hold the most promise for broader adoption, and for offering an alternative solution to platform moderation concerns.

For years, social apps have been trying to work out how they can better align with community expectations around moderation, while also limiting how often they need to step in to make moderation rules and decisions. Despite more recent accusations, none of them actually wants to interfere, because more speech, and specifically more divisive speech, is good for business: it drives more intense discussion, more debate, and more engagement in their apps.

Meta’s chief Mark Zuckerberg has repeatedly noted the importance of free expression, whether we want to hear what’s being said or not, while Elon Musk has also been a vocal proponent of free speech, in all forms (well, all forms that don’t impact him personally at least).

Free speech is not only a solid moral foundation to build such platforms around (or make a stand on), it’s also better for their bottom line, so the platforms will logically gravitate towards any solution that enables them to reduce their interference, which Community Notes could facilitate.

The only other viable, community-led moderation approach that’s worked has been up and downvotes, which every platform has also experimented with.

Reddit has seen significant success with this model, yet, at the same time, Reddit is now also facing challenges due to its over-reliance on user moderation, as it works to build on its business opportunities.

Eventually, Reddit may have to actually employ more moderators to do the job, as volunteers revolt against the platform’s decisions, while also looking for their share of ad intake, since they’re doing all the moderation work.

But at a base level, up and downvotes do provide an immediate audience response on platform content, which can also act as a moderation device, of sorts, even if it is an imperfect solution.

Community Notes is more specifically aligned with moderation calls, and with ensuring that a broad set of user viewpoints is incorporated into the results. There are limitations with this too, specifically with regard to how divisive issues are addressed, given that broad agreement is required to have a Community Note displayed.

On some of the most controversial topics, that agreement will never be reached, which means that Community Notes will never be shown on these posts. That puts the onus back on X’s human moderation team. But more broadly accepted violations, like fake images, scam ads, and false representation, are being debunked and highlighted by Community Notes.

That shows promise, and it could be that, eventually, X will be able to refine its system to the point where it is a real solution for many content concerns.

It’s not there yet, but it is interesting to see how Community Notes is evolving, and where it can be helpful in addressing these elements.