While it’s unlikely to be the moderation solution that Elon Musk seems to portray, X continues to develop Community Notes, its contextual information feature, which enables X users who are approved contributors to the program to add explainers and reference points to any post in the app, helping to improve understanding and limit the spread of misinformation.

Over the past week, X has expanded note exposure to maximize responses, and added notifications for when a note a user has created is deleted. And now, it’s making it easier for users to find notes, with a new Community Notes module for X Premium subscribers.

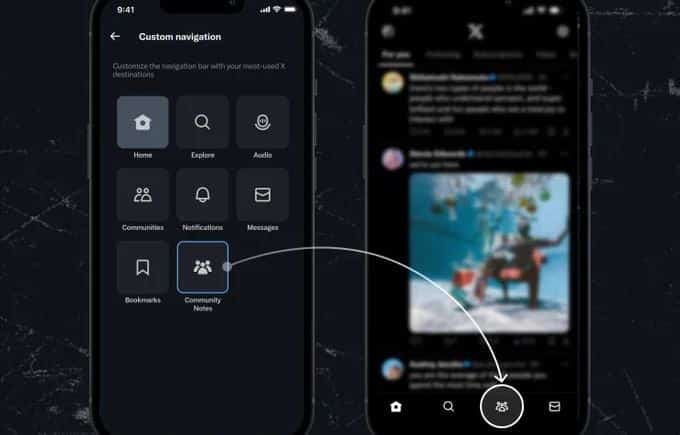

X Premium Subscribers can now pin Community Notes in their mobile app’s navigation for quicker access to browse and rate proposed notes. Not a Premium subscriber? No worries! We’re building a way for you to pin Community Notes too. Stay tuned! pic.twitter.com/YnQ5F7OqnX

— Community Notes (@CommunityNotes) September 18, 2023

That will further boost note exposure by enabling users to see more of the notes that have been added, helping to dispel false information before it gains any traction.

And as X notes, it’s also developing the same option for non-paying users, which will make it much easier to see which notes have been added, and which subjects are getting the most focus from Community Notes contributors at any given time.

It’s a good update to what is, in general, a good system, one that is helping to keep users better informed about questionable claims and content in the app.

There are, however, flaws in the process, which mean that many Community Notes fail to reach public view on some of the most divisive topics.

A recent analysis conducted by the Poynter Institute found that the vast majority of Community Notes are never actually seen by users in the app. That’s due to the way the Community Notes review system is structured: a note is only displayed once it gains consensus from users with opposing perspectives.

As explained by Poynter’s Alex Mahadevan:

“Essentially, [Community Notes] requires a cross-ideological agreement on truth, and in an increasingly partisan environment, achieving that consensus is almost impossible.”

X infers each Notes contributor’s political leaning from their past behavior in the app, and the system then requires agreement from contributors on both sides before a note is approved.
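To make that "both sides must agree" rule concrete, here’s a deliberately simplified sketch. It is not X’s actual algorithm (X’s published Community Notes ranking is based on matrix factorization, which this does not reproduce). The `Rating` type, the leaning scores, and the `threshold` and `min_per_side` values are all hypothetical, chosen only to illustrate why one-sided support isn’t enough.

```python
from dataclasses import dataclass


@dataclass
class Rating:
    # Hypothetical viewpoint score inferred from past behavior:
    # negative = one side, positive = the other, 0 = unclassified.
    rater_leaning: float
    helpful: bool


def note_is_shown(ratings, threshold=0.7, min_per_side=3):
    """Show a note only if raters on BOTH sides independently find it helpful.

    threshold and min_per_side are illustrative values, not X's parameters.
    Raters with a leaning of exactly 0 are ignored.
    """
    left = [r for r in ratings if r.rater_leaning < 0]
    right = [r for r in ratings if r.rater_leaning > 0]
    # Without enough input from each side, there is no cross-side consensus.
    if len(left) < min_per_side or len(right) < min_per_side:
        return False
    left_share = sum(r.helpful for r in left) / len(left)
    right_share = sum(r.helpful for r in right) / len(right)
    return left_share >= threshold and right_share >= threshold


# A low-stakes note (e.g. labelling satire) that both sides rate helpful.
satire = [Rating(-0.8, True)] * 4 + [Rating(0.6, True)] * 4
# A divisive note: one side rates it helpful, the other rejects it.
divisive = [Rating(-0.8, True)] * 10 + [Rating(0.7, False)] * 10

print(note_is_shown(satire))    # True
print(note_is_shown(divisive))  # False
```

Under a rule like this, even overwhelming support from one side can never get a note displayed, which is exactly the dynamic Poynter describes on divisive topics.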

Poynter’s analysis suggests that this approach is useful for highlighting “low-stakes” content, like clarifying satire or flagging AI-generated images, things that almost everyone agrees on. But some of the most harmful misinformation, along more divisive lines (e.g. COVID vaccine impacts, election interference, gender debate), is unlikely to get the required consensus.

As a result, on the topics where they’re most needed, the majority of Community Notes are not being displayed.

Maybe that’s by design, and maybe Musk and his team don’t see this as a major concern, as the more contentious debates are what X is all about: giving all people, of all persuasions, the opportunity to share their thoughts. And if there’s no definitive agreement on what’s right or wrong, that could actually be a good thing, helping to spark more debate and conversation. Perhaps, through that process, we could even reach a more enlightened view by seeing things from different perspectives.

That’s the optimistic view of social media, but evidence has shown that this is simply not how things work. Rather than making us more accepting, exposure to perspectives that differ from our own actually further entrenches our previously held beliefs.

And when you also factor in confirmation bias and cognitive dissonance, you can see how some of the worst types of misinformation actually stem from these debates. This is where Community Notes could be particularly valuable. Yet if we can’t even agree on what the facts are in such cases, I guess the system is, in a sense, working as it should.

Does that make it a good solution? X has reduced its reliance on internal moderation precisely because it wants users to decide what’s true and what’s not, rather than making those calls itself. That’s why Elon Musk keeps complaining about government interference, or attempted censorship based on political requests: his belief is that everyone should be able to view and hear all of the information available, and decide for themselves.

The problem is that this gives a leg-up to people who will blatantly lie to advance their own agenda, as it allows such claims to gain broad reach, often without being checked.

For example, Donald Trump claims to have won the 2020 U.S. presidential election. Many on the right will reinforce this, while those on the left will point to the recorded results.

Is that likely to produce the cross-perspective agreement that Community Notes requires?

Extend the same logic to climate change, COVID, and the war in Ukraine. There’s a whole range of divisive topics on which X is likely hosting, and broadly amplifying, false claims that are not getting “Community Noted” due to a lack of consensus.

Maybe that’s how it should be, but there do seem to be flaws in the system that could become more significant in the wrong circumstances.